10-30-2020 - Gear, Technology

HDR: What is it, and why do we want to shoot for it? A Conversation with Bill Baggelaar of Sony Pictures - Part 1

By: Jeff Berlin

SonyCine.com recently sat down with Bill Baggelaar to discuss all things HDR.

Bill Baggelaar is Executive Vice President and Chief Technology Officer at Sony Pictures Entertainment, as well as the Executive Vice President and General Manager of Sony Innovation Studios.

As CTO, Bill brings his extensive experience in production, visual effects and post-production along with his work collaborating with industry partners and internally across Sony in developing and implementing key technologies that have been instrumental, including IMF, 4K and HDR. He is responsible for the technology strategy, direction and research & development efforts into emerging technologies that will shape the future of the studio and the industry.

Prior to joining Sony Pictures in 2011, he was Vice President of Technology at Warner Bros. Studios where he worked with the studio and the creative community to bring new technologies to feature animation, TV and film production.

Bill is a member of the Academy of Motion Picture Arts and Sciences, the Television Academy and SMPTE.

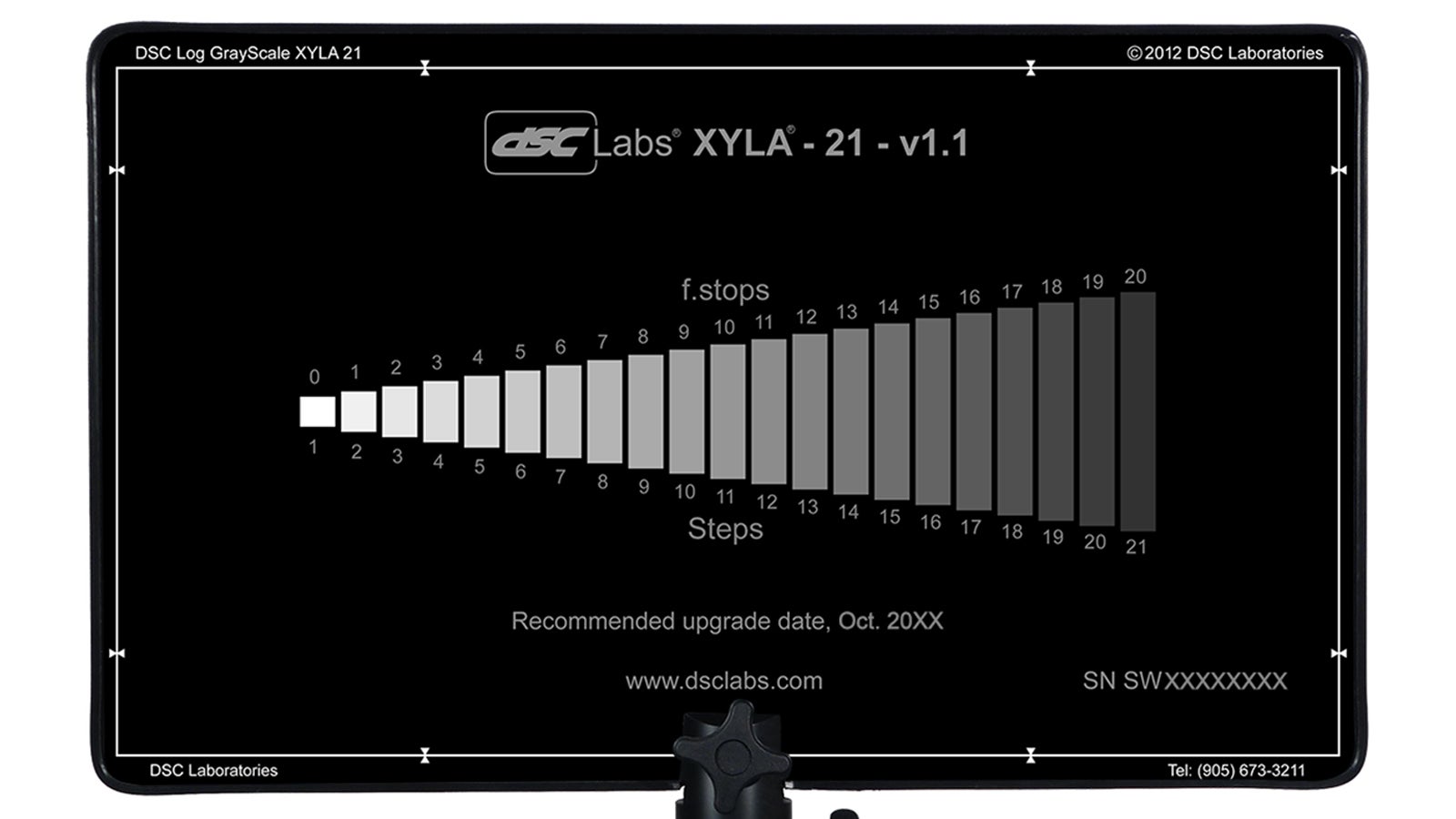

Xyla Dynamic Range Test Chart

Jeff Berlin:

What is HDR and why do we want to be shooting it?

Bill Baggelaar:

We have been shooting HDR for a long time for cinema. Film is an HDR medium. It's always been a high dynamic range medium and we've always had to squash it down into a much smaller display medium, whether that would be a 14 foot-lambert projection output or 100 nit Rec.709 output. You're talking about anywhere from 6 to 9 stops of dynamic range for a display, as compared to what the film is capable of - 12 to 14 stops of dynamic range.
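The figures above can be put on a common footing with a little arithmetic. The following is a minimal sketch, assuming the usual conventions that one stop is a doubling of light and that one foot-lambert is roughly 3.426 nits; the function names are hypothetical and chosen only for illustration.

```python
import math

# Assumption: 1 foot-lambert is approximately 3.426 cd/m^2 (nits).
NITS_PER_FOOT_LAMBERT = 3.426


def foot_lamberts_to_nits(foot_lamberts):
    """Convert a luminance in foot-lamberts to nits (cd/m^2)."""
    return foot_lamberts * NITS_PER_FOOT_LAMBERT


def stops_to_contrast_ratio(stops):
    """Each stop doubles the light, so n stops span a 2**n : 1 ratio."""
    return 2.0 ** stops


def contrast_ratio_to_stops(ratio):
    """Inverse of stops_to_contrast_ratio."""
    return math.log2(ratio)


if __name__ == "__main__":
    print("14 fL in nits:", round(foot_lamberts_to_nits(14), 1))
    for stops in (6, 9, 12, 14):
        print(stops, "stops ->", int(stops_to_contrast_ratio(stops)), ": 1")
```

Under these assumptions, a 9-stop display spans a 512:1 range, while 14 stops of capture spans 16,384:1, which is the gap that has to be squeezed down for display.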

So, we've always lived in a high dynamic range capture world. The intention of modern digital cinema cameras is to replicate film, and they have typically been in that range of 12 to 14 or 15 stops. We've also got cameras that are going even higher in dynamic range, but it's an interesting question: what is high dynamic range? We consider anything higher than 12 to 13 stops to be high dynamic range capture. Obviously, displays have a varying range of high dynamic range and ultimately, what we're after is better blacks and brighter highlights, and being able to utilize that range in between as creatives see fit.

I think for the average consumer, when you're talking about HDR you can get 600 nits peak brightness with significantly better deep blacks. But you can go even higher, 800, 1,000, 1,500 nits, these become very bright specular highlights, but it's more about how you manage that dynamic range and color in the content. You can still make a very low dynamic range image within this high dynamic range space and it can be compelling, just as you can also make something that is just very bright and isn’t compelling at all. It's really how you use the space. Think of HDR as a bigger palette of light and color. You now have more colors, and more detail in highlight colors that you're able to represent to the viewer if they have an HDR display.

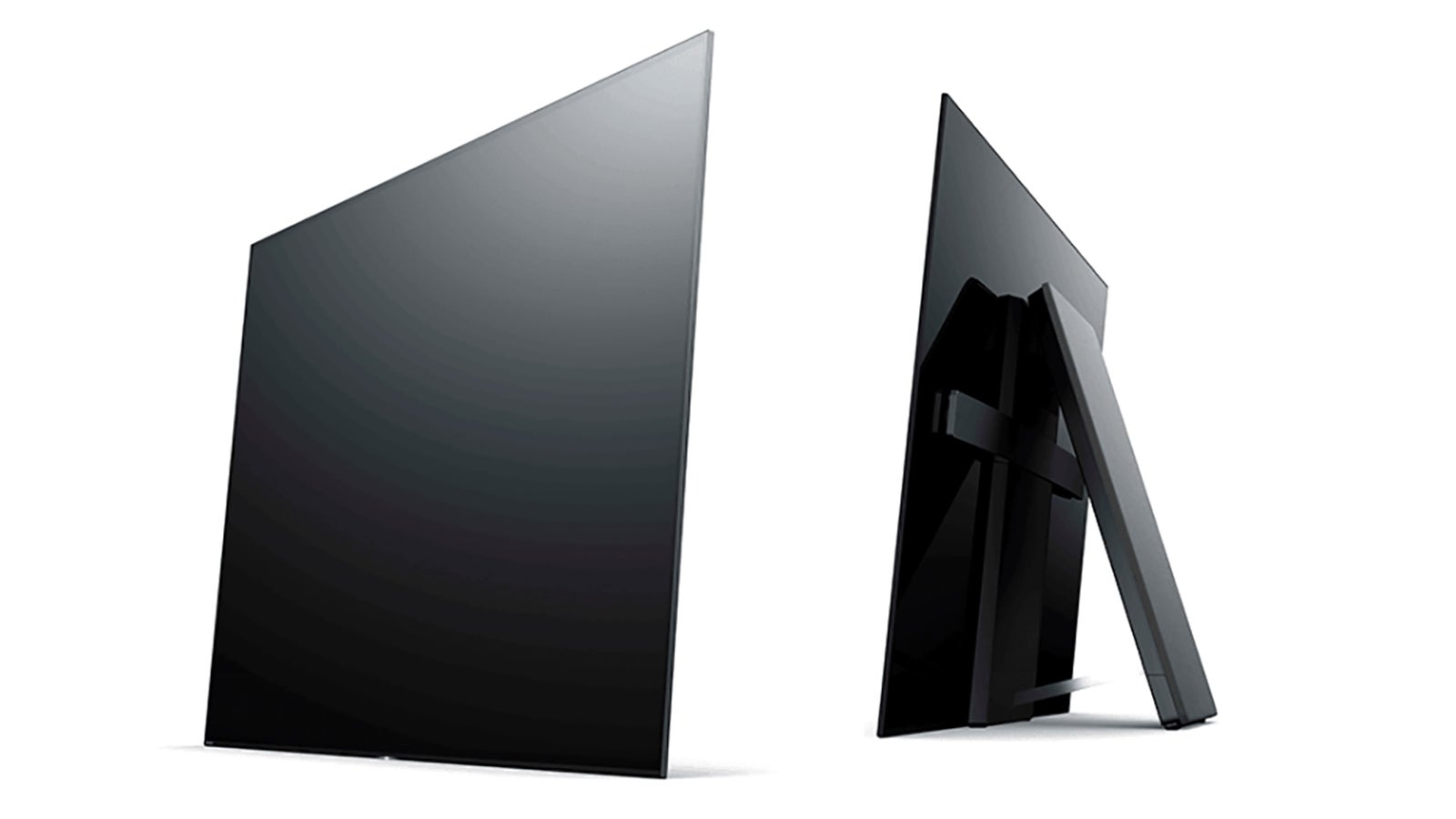

Sony A1E OLED HDR TV

At the end of the day, we want to capture HDR and be able to produce HDR content so that we can present a picture that is closer to the way its creator intends it to be. I can get better representation now in an HDR consumer display of what the creator’s original intent was.

Sometimes this means brighter colors. Sometimes it means better blacks. Sometimes it means I've got the whole palette that people can kind of go crazy with and really push things out because that's their intent. But that is hard to represent if they are limited by typical projectors or by a 100 nit SDR (Standard Dynamic Range) display.

Really, it’s a creative tool and I think you're now seeing that the adoption of HDR isn't always just about blowing things out and making them bright. It's about, "How do I use this new palette of color and light to my advantage to tell a story?" HDR is a creative tool, just as the camera, lenses, lighting, etc. are all part of the storytelling process.

Jeff:

Right. So speaking of telling stories and creator’s intent, as an aside, I have a Sony A1E OLED HDR TV at home and love watching HDR content on it.

Bill:

I have the same display.

Jeff:

I'm assuming you have Netflix?

Bill:

Of course, yes.

Jeff:

When you select a Dolby Vision program on Netflix, you see the TV snap into HDR mode. What are we seeing nits-wise, when the TV goes into HDR mode?

Bill:

You're seeing the TV switch from an SDR gamma. In comparison to HDR, SDR blacks are a bit lifted even on an OLED display where the blacks are very good. You're switching into a mode that allows the dynamic range of the content to be better represented on the display.

And it becomes a bit techy in exactly how that difference is sent over an HDR signal: it uses a log-type curve that allows better representation of the signal to the display. Essentially the idea behind HDR10 and Dolby Vision today is that if a colorist sets something on their mastering display at 10 nits or 500 nits, then that information gets carried through the signal and gets represented at 10 nits or 500 nits on the consumer’s HDR TV.

Now, not all TVs would necessarily be able to represent that thing at 500 nits, but they will try to represent it as closely as possible. The intent is to basically have direct signaling from what a creative says this should be to what the display should output.

And then, anything where it can't do that on the high end or the low end, it would tone map so that it can make the blacks look reasonable and make the highlights look reasonable so they don't just clip off.
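The highlight side of that idea can be sketched in a few lines. This is a minimal illustration only, assuming a simple "knee" roll-off: values below the knee pass through unchanged, and values above it are compressed smoothly toward the display's peak instead of clipping. The function name, the `knee_fraction` parameter and the curve itself are hypothetical; real TVs use their own proprietary tone-mapping curves.

```python
def tone_map_nits(nits, display_peak, knee_fraction=0.75):
    """Map a mastered luminance (nits) onto a display with a lower peak.

    Hypothetical roll-off, not any manufacturer's algorithm:
    - below the knee, the mastered value is shown as-is
    - above the knee, the excess is compressed so the output approaches
      display_peak asymptotically and never exceeds it
    """
    if nits < 0 or display_peak <= 0:
        raise ValueError("luminance values must be positive")
    knee = knee_fraction * display_peak
    if nits <= knee:
        return nits
    headroom = display_peak - knee  # range left above the knee
    excess = nits - knee            # how far the content overshoots the knee
    # Slope is 1.0 at the knee, so the curve joins the linear part smoothly.
    return knee + headroom * excess / (excess + headroom)


if __name__ == "__main__":
    for mastered in (10, 100, 500, 1000, 4000):
        shown = tone_map_nits(mastered, display_peak=600)
        print(f"{mastered:>5} nits mastered -> {shown:6.1f} nits displayed")
```

With a hypothetical 600-nit display, a 100-nit pixel is shown at 100 nits, while a 1,000-nit highlight lands just under the panel's peak and still keeps some separation from brighter values.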

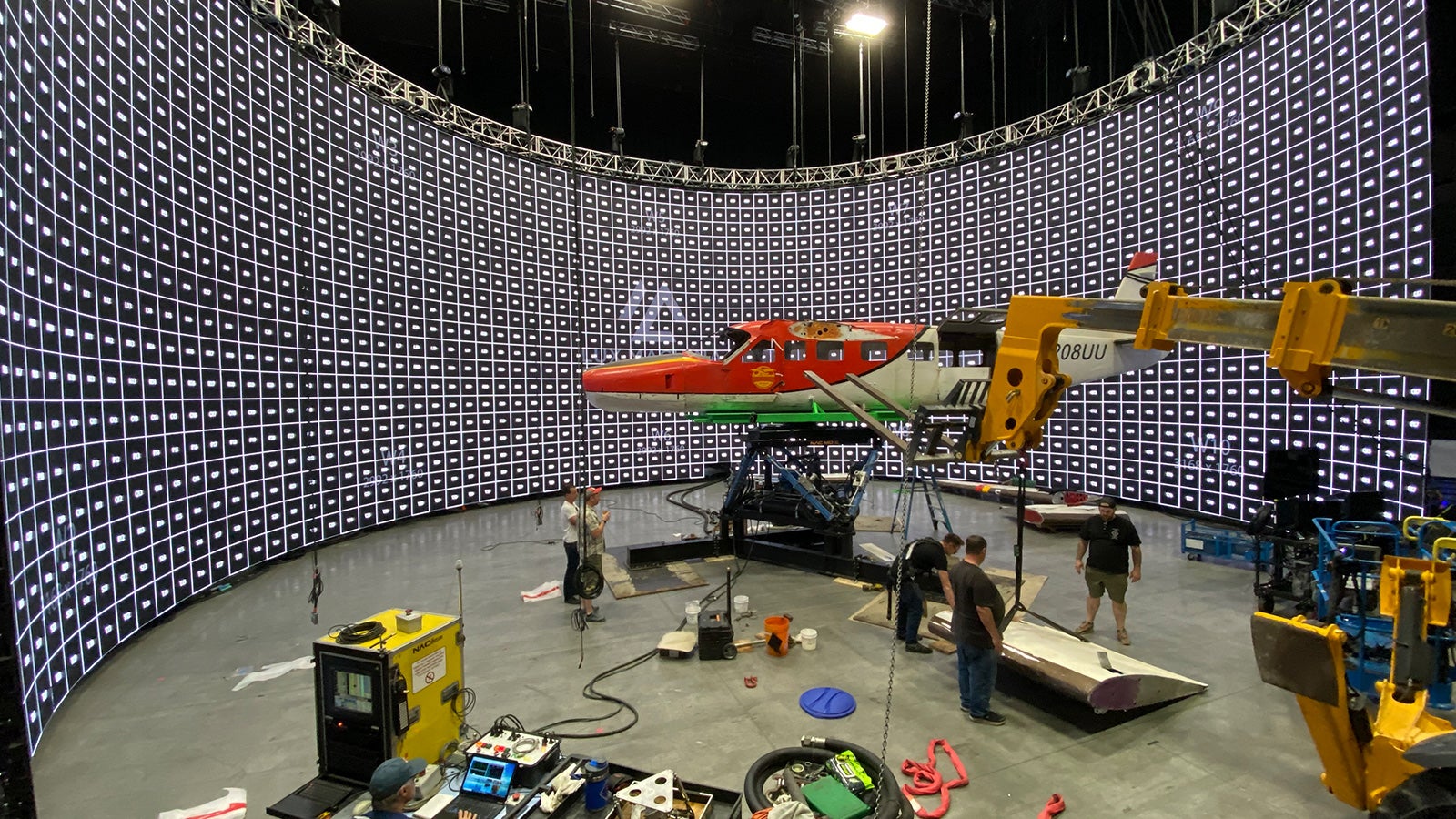

Sony VENICE Rialto build

Jeff:

Right. Let's talk about camera requirements.

Bill:

The Sony F55, the FX9 and the VENICE are definitely all HDR-capable cameras and a lot of the content that we shoot at Sony Pictures is shot on these cameras. For episodic television the F55 has been a workhorse. Some shows have also switched over to VENICE as their primary camera for episodic work.

For feature work, there's still the F65, which has a sort of niche place, but that is definitely an HDR-capable camera. And the VENICE has become popular in the theatrical space as well. A large majority of Sony Pictures content is episodics shot for television and streaming outlets, and most of it is shot on the VENICE and F55. We don’t do much live broadcast at Sony Pictures, but for broadcast TV, the FX9 and some other Sony broadcast cameras are employed for live sports and other events that can be produced in HDR.

Jeff:

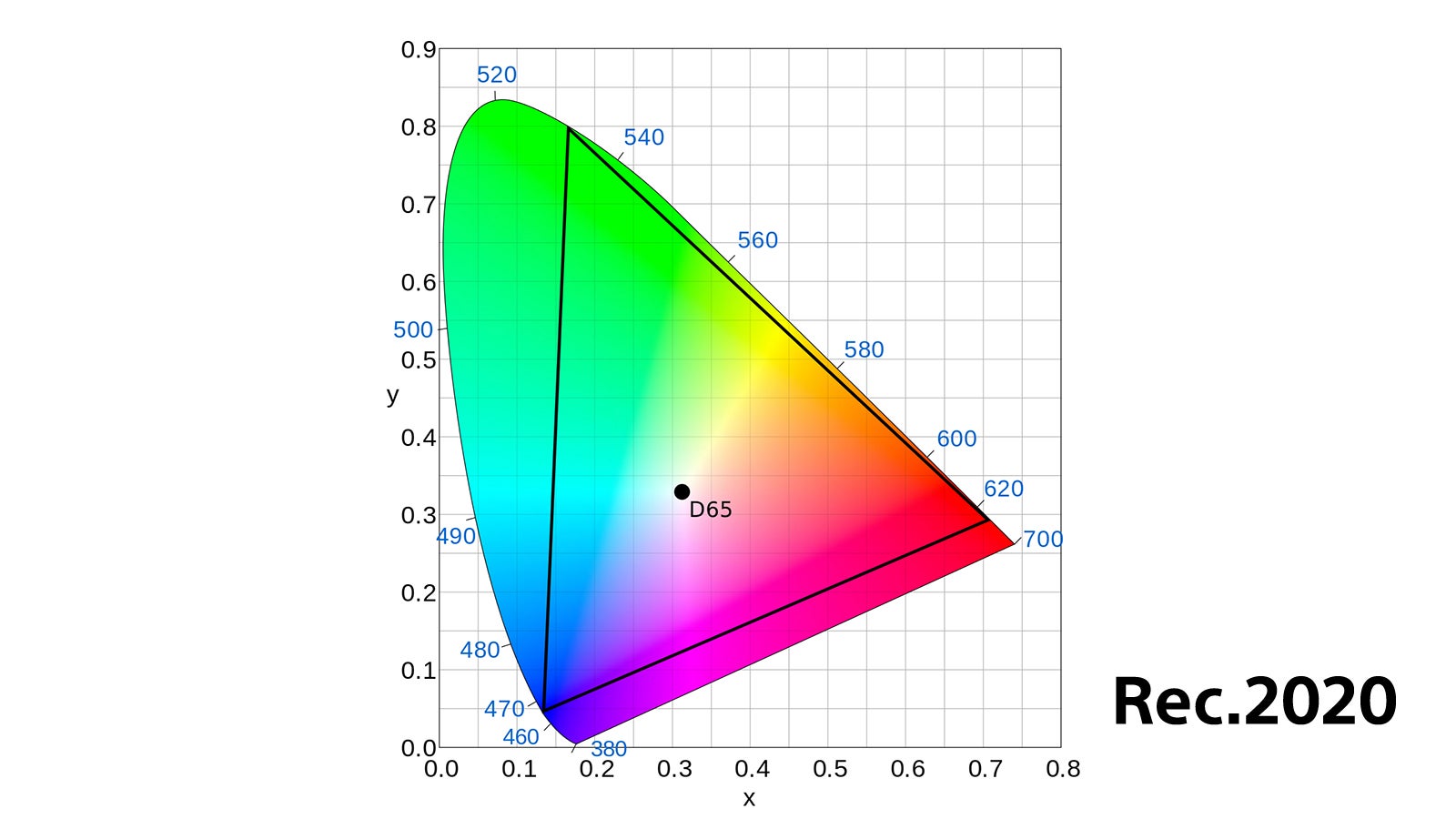

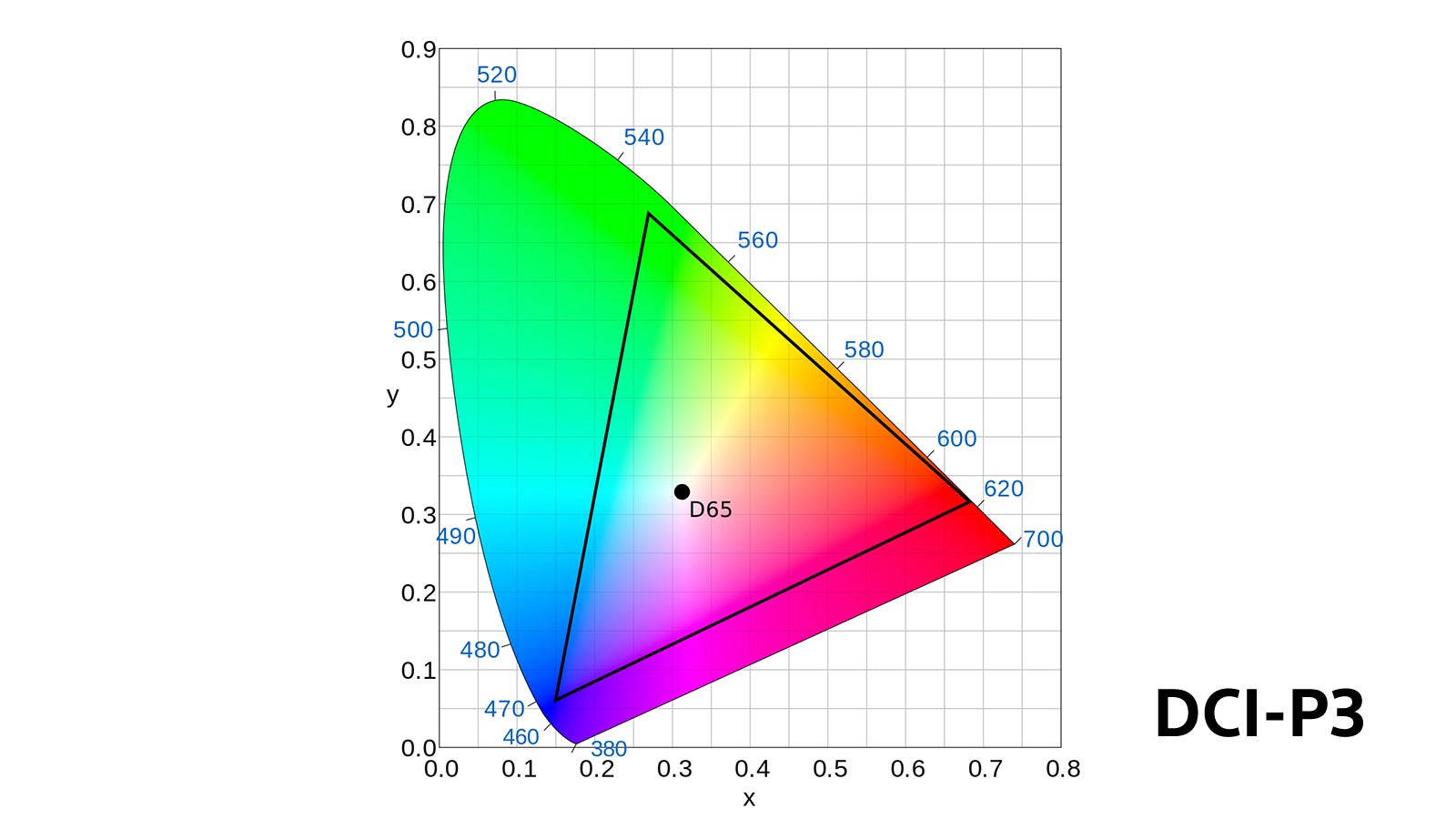

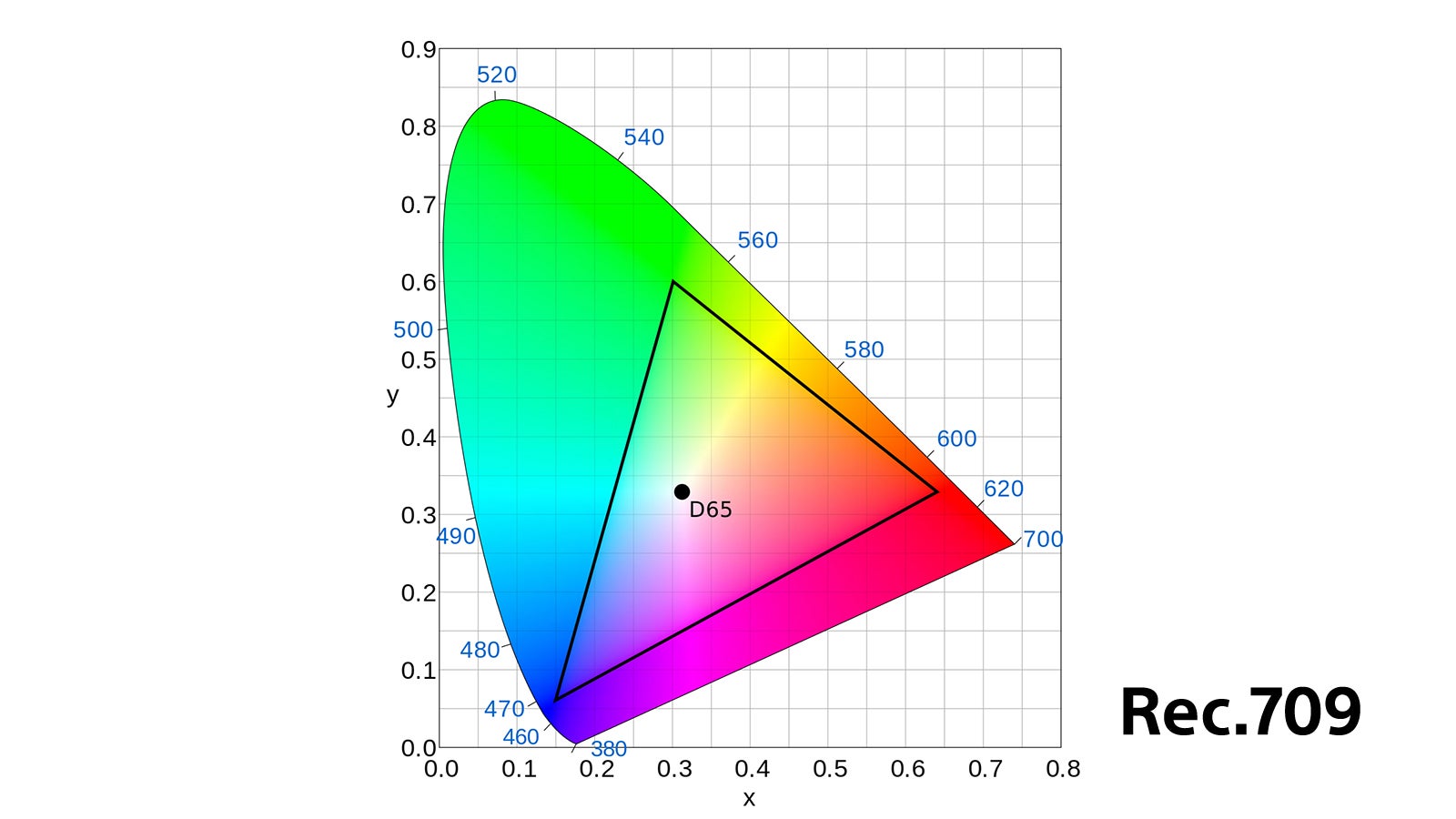

Obviously, we're talking about shooting raw in X-OCN, for example. And of course there’s Rec.2020 and its wider color gamut.

Bill:

The good thing about modern cinema cameras is that most of them have very wide dynamic range. They also have wide color gamut. Largely, they now have higher resolution than HD sensors, anywhere up to 4K, 6K and 8K, and now, getting even higher. But for the Sony cameras, which have their own camera color gamut, shooting in S-Log3, S-Gamut3.Cine is typical, and it’s a very wide color space, wider than most displays can produce, and creates a great HDR output.

Today, we are really, practically, constrained to P3 due to current HDR display technologies. We aren’t able to shoot and display all the way to the edge of Rec.2020. And so, by having a wider color gamut in the camera, what we're able to do is represent P3 color very accurately.

So, by having all of that color gamut in the camera, I can start to push and pull and do different things in a color correction suite without detrimentally affecting the image quality, which is really what we're after – giving a whole big bucket of color information so now I can massage the image the way I want to creatively tell my story and still make sure that it’s within the P3 space because that's really what TVs are capable of.

To represent true Rec.2020 colors, the full gamut of Rec.2020, we need lasers. Until we have the technology to do this for consumer displays on a cost-effective basis, we are constrained to the P3 space, which today's cameras allow us to represent. If we need to go back and remaster for a wider color gamut, we can do that down the road if necessary.
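One rough way to see how much smaller P3 is than Rec.2020 is to compare the areas of their gamut triangles on the CIE 1931 xy chromaticity diagram. The sketch below assumes the commonly published primary coordinates for Rec.709, P3 and Rec.2020; the helper names are hypothetical. Area in xy space is not perceptually uniform, so the ratios are only an indication.

```python
# Assumption: commonly published CIE 1931 xy primaries (red, green, blue).
PRIMARIES = {
    "Rec.709": [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)],
    "P3": [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)],
    "Rec.2020": [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)],
}


def triangle_area(points):
    """Area of a triangle from three (x, y) points (shoelace formula)."""
    (x1, y1), (x2, y2), (x3, y3) = points
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2.0


def area_ratio(smaller, larger):
    """Ratio of two gamut triangle areas in xy chromaticity space."""
    return triangle_area(PRIMARIES[smaller]) / triangle_area(PRIMARIES[larger])


if __name__ == "__main__":
    for name in ("Rec.709", "P3"):
        print(f"{name} area / Rec.2020 area: {area_ratio(name, 'Rec.2020'):.1%}")
```

By this measure the P3 triangle is roughly 72 percent of the Rec.2020 triangle, and Rec.709 roughly 53 percent.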

Jeff:

And obviously, bit depth has a lot to do with that as well?

Bill:

Absolutely. Bit depth is a huge component, especially with cameras shooting raw and getting 16-bit linear data out. 14, 15, 16 bits of color from the sensor really are necessary to create those smooth gradients and to capture that color and detail from these very large sensor cameras.
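The arithmetic behind that point can be sketched briefly. In a linear encoding, each stop down from full scale gets half as many code values as the stop above it, so the deepest stops are left with very few steps unless the bit depth is high. This is a minimal illustration with hypothetical function names; it ignores noise and any camera-specific encoding.

```python
def total_code_values(bits):
    """Number of distinct code values at a given bit depth."""
    return 2 ** bits


def linear_codes_in_stop(bits, stops_below_peak):
    """Code values available to one stop in a linear encoding.

    stops_below_peak=1 is the brightest stop (the top half of the range),
    stops_below_peak=2 is the next stop down, and so on.
    """
    if stops_below_peak < 1:
        raise ValueError("stops_below_peak must be 1 or greater")
    upper = total_code_values(bits) >> (stops_below_peak - 1)
    lower = total_code_values(bits) >> stops_below_peak
    return upper - lower


if __name__ == "__main__":
    for bits in (10, 12, 16):
        per_stop = [linear_codes_in_stop(bits, s) for s in (1, 6, 10, 14)]
        print(f"{bits}-bit linear, codes in stops 1/6/10/14:", per_stop)
```

At 16 bits, the brightest stop has 32,768 code values and the 14th stop down still has 4; at 10 bits linear, the 10th stop has a single code value and nothing is left below it.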

Jeff:

And HLG?

Bill:

HLG is a really important delivery mechanism for a lot of broadcasters. HLG was designed for live and broadcast TV, specifically. Broadcasters aren't limited to HLG, but it's sort of a compromise: a broadcast-capable HDR system without the complexity of what is traditionally called the PQ system, which is what we master our theatrical and episodic content in.

In the U.S., on DirecTV and other outlets, on their live channels there will probably be HLG delivery, but PQ is being used for video-on-demand content and for Amazon and Netflix. I don't think Hulu is HDR yet. But Netflix, Amazon and Apple TV+ are showing movies that are delivered in PQ.

They can live side by side, PQ and HLG. I’ve always looked at it this way - we master content in PQ to ensure we have a very defined output, and then how you deliver it depends on the pipeline. If it's best to convert to HLG to get it to the consumer, fine. If you keep it in PQ, that's also fine. Dolby Vision adds some metadata on top of PQ to help TVs reproduce the signal more accurately, and that’s fine too. Other types of metadata can be added to the PQ system down the road, if needed.

That's one of the differences, too: PQ can carry these additional metadata enhancements, whereas HLG was designed to have no metadata.
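For readers who want to see what a metadata-free, relative signal looks like, here is a minimal sketch of the HLG transfer curve (the OETF), assuming the constants published in ITU-R BT.2100. The input is scene light normalized to the range 0 to 1 rather than a value in nits, and the function name is hypothetical.

```python
import math

# Assumption: HLG constants as published in ITU-R BT.2100.
A = 0.17883277
B = 1 - 4 * A
C = 0.5 - A * math.log(4 * A)


def hlg_oetf(scene_light):
    """Encode normalized scene light (0.0 to 1.0) as an HLG signal (0.0 to 1.0).

    The lower part of the range uses a square-root (gamma-like) segment and
    the upper part a logarithmic segment; the two meet at a signal of 0.5.
    """
    if not 0.0 <= scene_light <= 1.0:
        raise ValueError("scene_light must be normalized to 0.0..1.0")
    if scene_light <= 1 / 12:
        return math.sqrt(3 * scene_light)
    return A * math.log(12 * scene_light - B) + C


if __name__ == "__main__":
    for light in (0.0, 1 / 12, 0.25, 0.5, 1.0):
        print(f"scene light {light:.4f} -> HLG signal {hlg_oetf(light):.4f}")
```

Nothing in the signal says how many nits a given value should be; each display scales the relative signal to its own capabilities, which is why no metadata is required.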

Jeff:

Define PQ.

Bill:

PQ stands for perceptual quantizer, but ultimately, I think it's better to discuss the differences between PQ and HLG. PQ directly specifies luminance values, whereas HLG is a relative luminance system.

In PQ, I'm able to say, "Here's a pixel. Here's the color value, and I want this pixel displayed at 50 nits or 100 nits or 150 nits." And the display is supposed to represent that as best as it can at that luminance value.
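That absolute mapping between signal and luminance can be sketched as follows. This is a minimal illustration, assuming the PQ constants and 10,000-nit ceiling published in SMPTE ST 2084; the function names are hypothetical, and the signal is treated as a normalized 0 to 1 value rather than as 10-bit or 12-bit code values.

```python
# Assumption: PQ constants as published in SMPTE ST 2084.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PQ_PEAK_NITS = 10000.0


def pq_encode(nits):
    """Absolute luminance in nits -> normalized PQ signal (0.0 to 1.0)."""
    y = min(max(nits, 0.0), PQ_PEAK_NITS) / PQ_PEAK_NITS
    y_m1 = y ** M1
    return ((C1 + C2 * y_m1) / (1 + C3 * y_m1)) ** M2


def pq_decode(signal):
    """Normalized PQ signal (0.0 to 1.0) -> absolute luminance in nits."""
    s = min(max(signal, 0.0), 1.0) ** (1 / M2)
    return PQ_PEAK_NITS * (max(s - C1, 0.0) / (C2 - C3 * s)) ** (1 / M1)


if __name__ == "__main__":
    for nits in (50, 100, 150, 1000, 10000):
        signal = pq_encode(nits)
        print(f"{nits:>5} nits -> signal {signal:.4f} -> {pq_decode(signal):.1f} nits")
```

Each signal value corresponds to one specific luminance, so a pixel graded at 100 nits encodes to roughly half of the signal range and decodes back to 100 nits on any display that can reach it.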

From a creative standpoint, this is why we master in PQ, because we know what we are seeing on the master display is exactly the value that we want it to be and therefore, downstream, it should get represented that way, as well, as best as it can.

It's the direct correlation between the value that I'm seeing and mastering at, and then being able to get that downstream to the consumer.