06-21-2021 - Gear, Technology

Why Sony’s Hybrid Autofocus Technology is So Reliable

By: No Film School

We pull back the curtain on Sony AF.

In collaboration with No Film School.

Autofocus has become an integral part of production workflows because of its speed, reliability, and precision. Sony is one of the companies at the forefront of developing autofocus technology that we can lean on in the field.

Even as resolution increases and higher frame rates become more common, autofocus algorithms are able to track subjects easily and with pinpoint accuracy. Sony uses a Fast Hybrid AF system that combines phase detection autofocus with contrast detection autofocus to deliver tack-sharp images, even in low-light conditions.

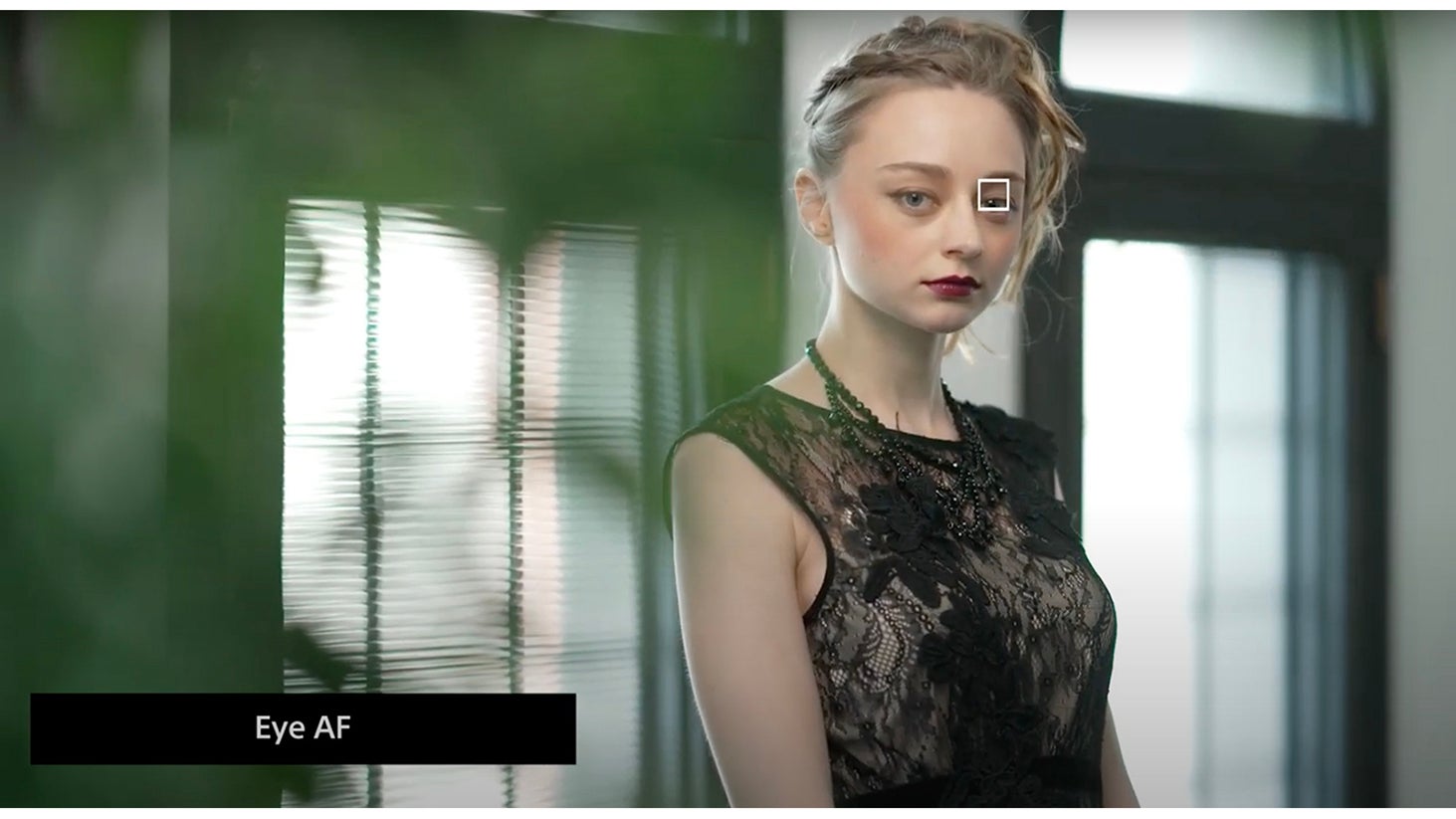

Over the last decade, we’ve seen new technology emerge, like real-time Face Detection, Eye AF, and Animal AF. We spoke with Mark Weir, Sr. Manager of Technology at Sony, about the history of Sony autofocus and where it's headed. Here’s what he had to say.

No Film School: Sony autofocus is one of the best out there. What makes it so reliable in comparison to others?

Mark Weir: Manufacturers like Sony who are designing, engineering, and manufacturing the camera, image sensor, and lenses have a substantial advantage in terms of advancing autofocus technology. But on top of the hardware, there’s also the algorithm. It’s a combination of those things: the image sensor, the camera, the lenses, and the algorithms that control them.

The cameras can only perform as well as the lenses can keep up with them. And the lenses have a responsibility with the camera to keep up with the instructions and also to provide accurate information on the image sensor.

NFS: That makes total sense. If a company has its hand in all three, there can be cross-pollination in development. I'm curious: in the early days of developing AF for mirrorless cameras, was there a breakthrough moment?

Weir: Mirrorless technology, or the way mirrorless cameras work and operate, represents an enormous advantage over what cameras had to deal with in the past. Bringing the phase detection sensor to the image sensor was really one of the major breakthroughs from a hardware perspective. Without that, most of these benefits would not be possible.

When phase detection was first developed in SLRs, it wasn’t done on the image sensor but separately. That was one of the things that separated still cameras from video cameras, and that separate sensor was prone to errors since the film plane and the focusing plane were not the same thing.

Developing image sensor technology such that phase detection could be conducted at the film plane, and developing devices that could operate and process the data fast enough for the work to be done on the image sensor, was another breakthrough.

If the sensor is busy capturing the image, it’s not going to do a good job measuring phase, or vice versa, and it needs to be able to measure subject-to-camera distance many times per second. That requires throughput at prodigious rates, not only from the image sensor but also from the processor. It's really a combination of the device technology as well as the algorithm necessary to use the information to get it all done.

NFS: Phase detection is a big part of autofocus, but for Sony, so is contrast detection autofocus, and together they make up Sony’s Fast Hybrid AF. Was Hybrid the plan for Sony from the start?

Weir: In video recording, before phase detection was brought to the image sensor itself, you could only manage contrast detection. I’d say about the same time phase detection was realized, so was Hybrid AF.

NFS: The combination is an absolute gem. Do you recall a moment when Hybrid AF made its mark?

Weir: I remember when the Sony a7R II was introduced and it was really a eureka moment as several journalists using the camera quickly realized something that we didn’t even popularize – that the a7R II could focus manufacturers’ SLR lenses faster through an adapter than SLRs could.

I remember reading about that in the press, saying if a Sony camera can do that then that’s a big step further.

NFS: Lens adapters have really revived old lenses and allow us shooters to switch across platforms. But mirrorless lenses are built so differently from SLR lenses that it’s hard to compare the two anymore.

Weir: It didn’t take long for Sony to realize that lenses built for SLRs could not compete with lenses built for mirrorless cameras. That’s not only in terms of their optical formula and optical geometry, since SLR lenses don’t take advantage of the short flange distance of mirrorless cameras. It was also quickly discovered that lenses meant to truly take advantage of mirrorless cameras would need new mechanics to keep up with the speed of those cameras.

NFS: Can you talk about what makes the Sony mirrorless lenses different when it comes to autofocus?

Weir: When we started developing mirrorless lenses, we were using rotational stepping motors, which needed their motion turned into linear motion. That’s pretty much where other manufacturers of mirrorless lenses and cameras are today.

But one of the things we discovered around 2014 was that we had to rethink the way autofocus actuators worked. That’s when we started creating linear actuators, which have no translation from rotational motion to linear motion – there are no gears and no friction.

Even today, most of the recent lenses introduced by mirrorless camera manufacturers or third-party lens manufacturers are using rotary actuators. The helical mechanism or gears required to convert rotary to linear movement slow them considerably and can induce error and noise.

We shifted to linear actuators 4-5 years ago.

NFS: Well that tells us a lot as to why Sony mirrorless lenses are so good.

Weir: It’s not just precision but speed. In the old days, we lived in 24p, but now we live in a world of 4K 120p in mainstream cameras. You don’t have to spend $50K to get 4K 120p slow motion. If you can’t execute focus movements dozens of times per second, you’re not going to be able to use autofocus with wide aperture lenses on high frame rate cameras.

The FX6 and FX3 cameras have the ability to capture a shallow depth of field with wide aperture lenses due to their full frame image sensors, and are able to deliver the autofocus necessary. It’s quite a challenge to pull focus at 120p when the depth of field is measured in fractions of an inch.

That’s part of the revolution. The autofocus systems are capable enough in these challenging situations that they are starting to take over. Especially in the world of solo shooters.
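To put "fractions of an inch" in perspective, here is a rough depth-of-field calculation using the standard thin-lens approximation. The lens (85mm at f/1.4), the subject distance (1 meter), and the 0.03mm circle of confusion often quoted for full frame are illustrative assumptions, not figures from the interview or from Sony.

```python
MM_PER_INCH = 25.4


def depth_of_field_mm(focal_mm, f_number, subject_mm, coc_mm=0.03):
    """Approximate total depth of field in millimeters (thin-lens model).

    coc_mm is the circle of confusion; 0.03 mm is a commonly quoted
    full-frame value and is an assumption here.
    """
    # Hyperfocal distance: focusing here keeps everything to infinity acceptably sharp.
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    if subject_mm >= hyperfocal:
        return float("inf")
    # Near and far limits of acceptable sharpness around the subject.
    near = subject_mm * (hyperfocal - focal_mm) / (hyperfocal + subject_mm - 2 * focal_mm)
    far = subject_mm * (hyperfocal - focal_mm) / (hyperfocal - subject_mm)
    return far - near


if __name__ == "__main__":
    # Hypothetical setup: 85mm lens wide open at f/1.4, subject 1 meter away.
    dof = depth_of_field_mm(focal_mm=85, f_number=1.4, subject_mm=1000)
    print(f"Depth of field: {dof:.1f} mm ({dof / MM_PER_INCH:.2f} in)")
```

Under these assumptions the zone of acceptable sharpness comes out well under an inch, so even a small subject movement between frames calls for a focus correction.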

NFS: Couldn’t agree with you more. Glad you brought up the FX series. The FX9, FX6, and FX3 all share the same hybrid autofocus technology as the Alpha series, right?

Weir: It is very much shared technology, and it’s very much headed in the same direction. We believe that Hybrid AF, which combines the speed, tracking capability, and object recognition of phase detection autofocus, is becoming more and more essential.

Contrast AF is great to have due to its precision, but by its very nature, it’s challenged in terms of speed. It’s depth finding but it’s not depth aware. And the very nature of depth awareness itself gives phase detection an advantage when it comes to knowing which direction to go in. Something that contrast detection can’t practically do.
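The difference between "depth finding" and "depth aware" can be illustrated with a toy model. The sketch below is not Sony's algorithm: `ToyLens`, the sharpness curve, the 0.9 phase gain, and the step sizes are all hypothetical. It only shows why a contrast-only search has to probe and back up, while a signed phase estimate knows which way to move, and how a hybrid routine might use phase for the coarse jump and contrast for the final touch.

```python
from dataclasses import dataclass


@dataclass
class ToyLens:
    position: float
    target: float  # true in-focus position, hidden from the AF routines
    moves: int = 0

    def move_to(self, position):
        self.position = position
        self.moves += 1

    def contrast(self):
        # Scalar sharpness score: peaks at the target, carries no direction.
        return 1.0 / (1.0 + (self.position - self.target) ** 2)

    def phase_offset(self, gain=0.9):
        # Signed defocus estimate; gain != 1 models an imperfect measurement.
        return gain * (self.target - self.position)


def contrast_af(lens, step=8.0, min_step=0.05):
    """Hill climb: try a step, keep it if sharper, else back up, reverse, halve."""
    best = lens.contrast()
    direction = 1.0
    while step >= min_step:
        previous = lens.position
        lens.move_to(previous + direction * step)
        score = lens.contrast()
        if score > best:
            best = score
        else:
            lens.move_to(previous)  # wasted move: we overshot or guessed wrong
            direction = -direction
            step /= 2
    return lens.position


def phase_af(lens, tolerance=0.05):
    """Move by the signed offset until the measured offset is within tolerance."""
    offset = lens.phase_offset()
    while abs(offset) > tolerance:
        lens.move_to(lens.position + offset)
        offset = lens.phase_offset()
    return lens.position


def hybrid_af(lens):
    """Assumed hybrid scheme: coarse phase jump, then a fine contrast search."""
    phase_af(lens, tolerance=0.5)
    return contrast_af(lens, step=0.4, min_step=0.05)


if __name__ == "__main__":
    for name, routine in [("contrast", contrast_af), ("phase", phase_af), ("hybrid", hybrid_af)]:
        lens = ToyLens(position=0.0, target=50.0)
        final = routine(lens)
        print(f"{name}: final position {final:.2f} after {lens.moves} lens moves")
```

In this toy setup the contrast-only search spends many of its moves overshooting and backing up, which is the speed penalty described above, while the hybrid routine reserves the slower search for the last fraction of the travel.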

NFS: Can you talk about how Eye AF and Animal Eye AF have evolved?

Weir: My understanding of the way it's implemented is that Eye Detection is an extension of Face Detection.

Face Detection was demonstrated as early as Photokina 2009. It’s looking for certain characteristics of faces. More recently, as the technology developed, face detection advanced using artificial intelligence, so not only was it detecting the characteristics of a face, but it was also referring to an image database, and by using that, it could advance the effectiveness of its object and subject recognition.

The notion of Eye Detection, I think, is the next step in that process. I think as we implemented it in a number of different devices, Face Detection and Eye Detection are being augmented with other information like color, depth, movement, etc.

When we see the system for Eye Detection that goes on to animal or bird AF, what we are seeing is slight variances in the way the algorithm works and probably slight variances in the database to which the intelligence is referring when it’s doing the tracking.

NFS: Can you talk about what’s next for Sony autofocus?

Weir: I’m really not engaged in the development cycle. But I think most development is aimed towards responding to the requests of end-users.

And if we look at the requests from end-users, it would be things like higher performance, more positive identification, more effective tracking, and the ability to regulate the stickiness of the tracking: whether it ignores or responds to other elements or objects that come between the camera and the subject that’s being tracked. All those are probably high on the list. And we are doing those already. We already have the ability for users to adjust the tracking sensitivity with the FX9, FX6, and FX3.