10-22-2020 - Gear, Technology

Cine Lens Metadata - An Overview - With Snehal Patel of ZEISS Lenses - Part 1

By: Jeff Berlin

Snehal Patel is a film and television professional with over two decades of experience creating content and adapting new technology. He started the first Canon Bootcamp in Los Angeles during the Canon 5D DSLR craze.

Snehal has lived and worked in Chicago, Mumbai and Los Angeles as a freelance Producer & Director. He was a camera technical salesperson at ARRI, and currently works as the Sales Director for Cinema at ZEISS. He represents the Americas for ZEISS and is proud to call Hollywood his home.

This is Part 1 of 3 parts - an overview of smart lenses, lens metadata, protocols, and why this technology should be on your radar.


ZEISS Supreme Prime with data port and contacts visible in PL mount

Jeff Berlin:

What is lens metadata?

Snehal Patel:

Lens metadata refers to information that comes from what's considered a smart lens. The information is generated by sensors inside the lens. That information is then conveyed through a language and stored in a database on the camera side or on another connected device. When you power up a smart lens, you're powering up the sensors, which are recording relevant data.

There are different ways to communicate this information. A lot of companies have developed their own protocols. If you buy a Sony still lens, then you have a Sony protocol, but in the PL mount cinema world, the two major protocols are Cooke/i technology and ARRI’s LDS technology. These are two languages that are used for collecting information and storing it in a database.

So, let's take a look at Cooke/i. /i is more ubiquitous because it's really easy to license. Cooke only charges a manufacturer a nominal fee of one euro a year to license Cooke/i technology.

Jeff:

Wow. Well I would be willing to defray ZEISS’s cost on that next year.

Snehal:

[Laughs] The one euro a year basically makes the system not completely open source, so not everyone can have it. You have to be an approved company, but there are very few companies that get disapproved, and it is technically still a licensed technology. You have to be a licensed partner to be supported by the infrastructure, but it's almost open source in the sense that if you wanted to, you could add information or use the language to do things that other manufacturers are not doing. You can have manufacturer-specific things that only you do.

Wooden Camera Arri LPL to Sony VENICE adapter, contacts for smart lenses are visible

Snehal:

If you look at lens communication, when a lens has sensors in it and communicates information to a camera, the Cooke/i protocol specifies certain things that you have to communicate. This is very similar to LDS, ARRI’s system. The lens must tell you the lens and manufacturer's name, the focal length, the firmware version it’s currently running, the entrance pupil position, and, in real time, the T-stop value and focusing distance.

If the lens focus ring is calibrated correctly, the camera knows exactly what mark you are on for your focus and your iris value, and then for a zoom, it would also be the zoom value, the focal length of the lens. This information is required. You have to provide this information. Based on the lens’ entrance pupil position and sensor information, cameras will actually calculate depth of field based on an agreed upon circle of confusion setting.
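As a rough illustration of the depth of field calculation described here, the following is a minimal sketch using the standard thin-lens formulas. It is not how any particular camera implements it. The function name and parameters are hypothetical, the f-number is used as a stand-in for the reported T-stop, and the focus distance is assumed to be measured from the lens rather than corrected with the entrance pupil position.

```python
import math


def depth_of_field(focal_length_mm, f_number, focus_distance_mm, coc_mm):
    """Return (near_mm, far_mm) limits of acceptable sharpness.

    Thin-lens approximation; assumes focus_distance_mm > focal_length_mm.
    """
    f = focal_length_mm
    s = focus_distance_mm
    # Term that grows with aperture number, circle of confusion and distance.
    blur_term = f_number * coc_mm * (s - f)
    near = s * f * f / (f * f + blur_term)
    # At or beyond the hyperfocal distance the far limit is infinity.
    if f * f <= blur_term:
        far = math.inf
    else:
        far = s * f * f / (f * f - blur_term)
    return near, far


# Illustrative values only: 50 mm lens, aperture 2.0, focused at 3 m,
# with an assumed circle of confusion of 0.03 mm.
near, far = depth_of_field(50.0, 2.0, 3000.0, 0.03)
print(f"near {near:.0f} mm, far {far:.0f} mm")
```

Changing the circle of confusion changes the result, which is why the camera and the lens data have to agree on that setting.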

This is the information you want to get from the lens; it has to give this information at a minimum. Cooke/i also allows for extra things to be added into other columns of info. In the new /i3, or i3, specification, they have even labeled these extra columns, like shading and distortion characteristics, et cetera. What ZEISS did was take one of these optional columns and dedicate it to our information, our characteristics. The two major things that we're tracking aside from T-stop value and focusing distance, what we call eXtended Data, are shading and distortion characteristics.

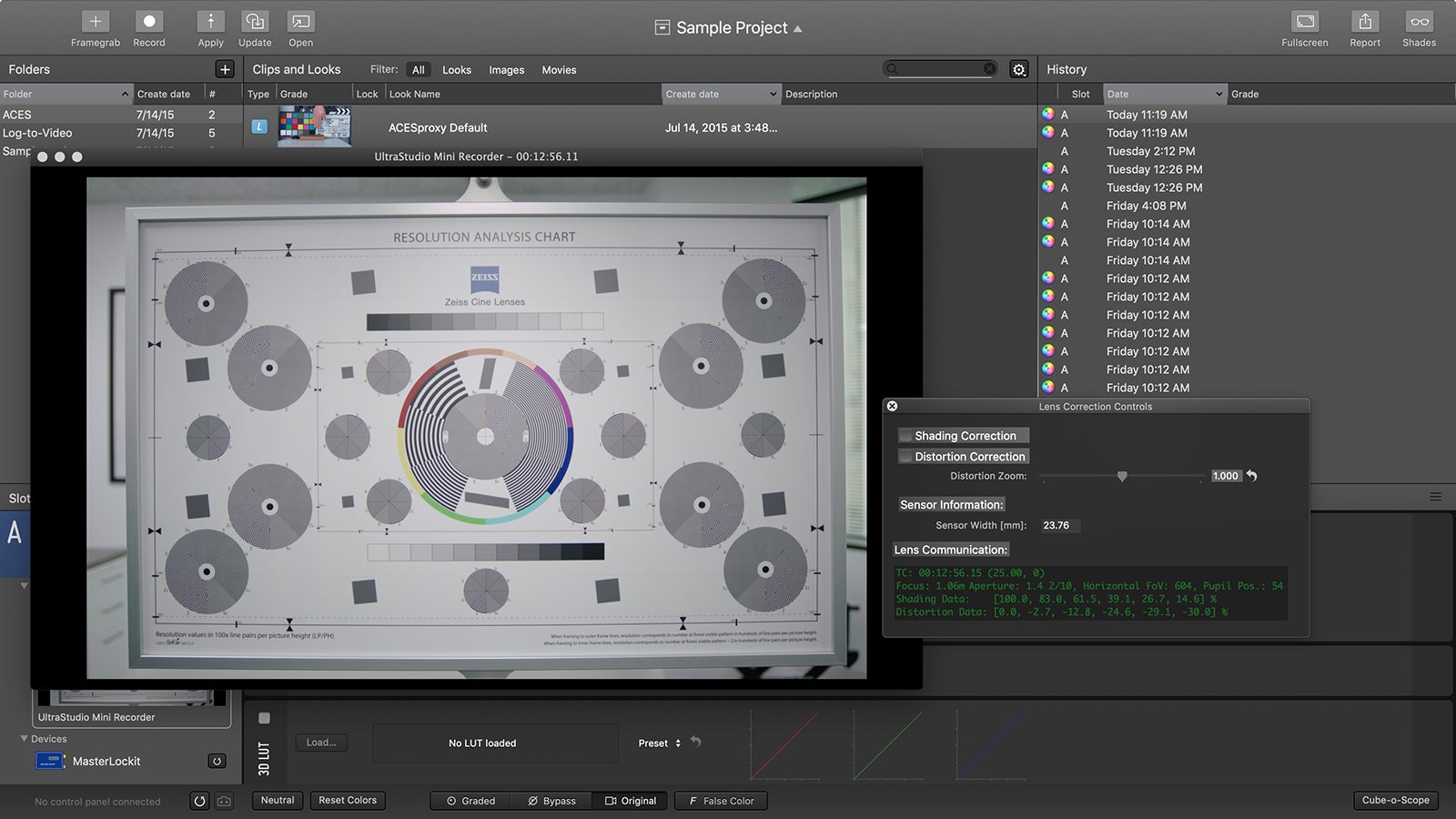

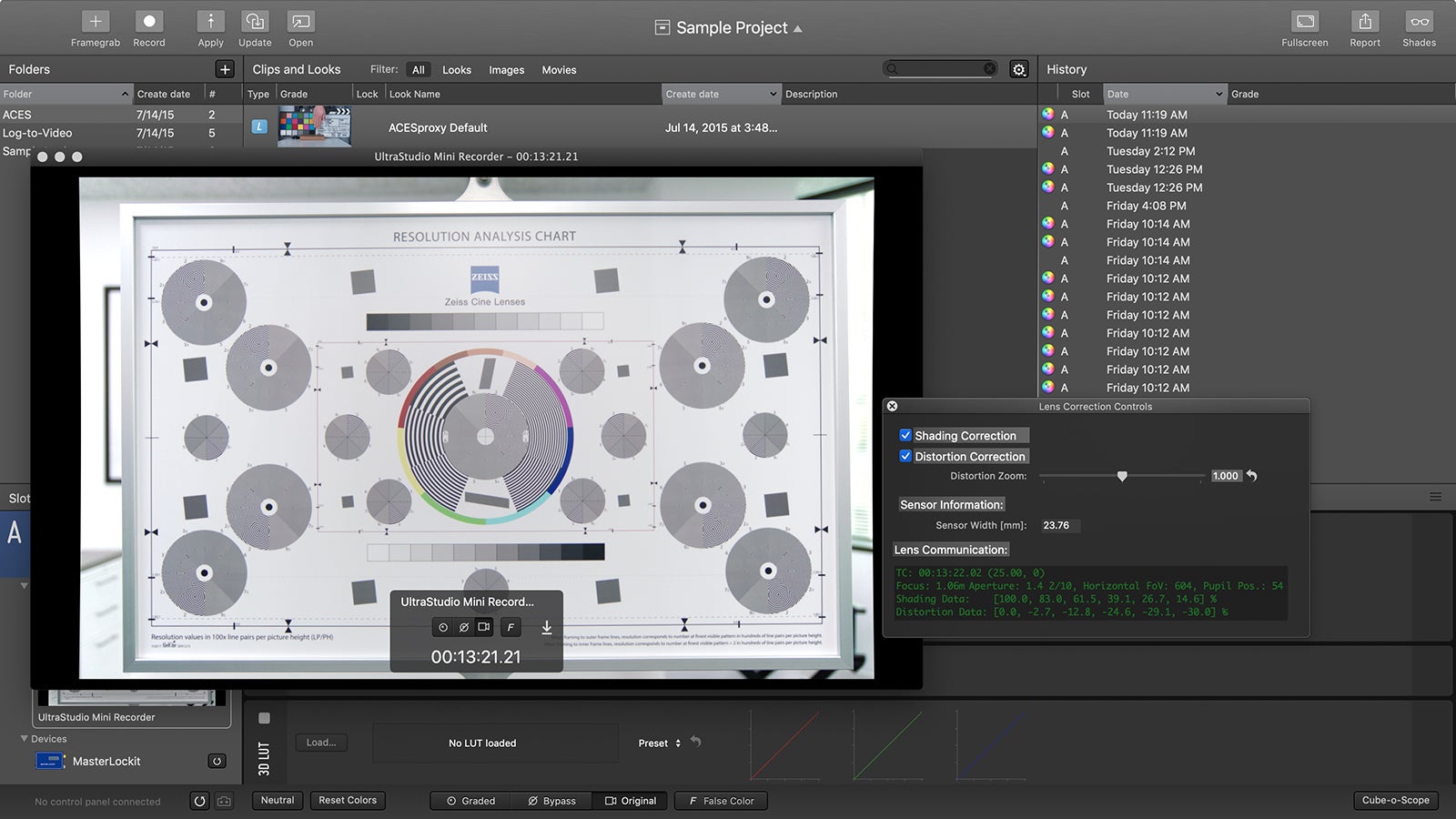

Before application of shading and distortion corrections

Snehal:

In the future we could include even more things. The shading and distortion characteristics are important, because they're used for lots of different applications. It's not just one type of visual effects application, it's multiple visual effects applications. It's also very important for live virtual production, because you need it when you're calibrating with a live camera that's tracking camera movement. Lens profile is necessary for that. You can use the data for stitching things together, for set extensions, for green screen work. There are so many different reasons where you can use shading and distortion information.

So, to summarize, lens metadata is data that is communicated from the lens. Part of it is fixed information, like the focal length, the lens manufacturer, serial number and firmware version. Some of it is dynamic data, like focusing distance and iris position. You're now also starting to see more dynamic data, like lens distortion and lens shading characteristics, with other things still to come. That is what lens metadata is: not the metadata of the camera, just the lens.
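The split between fixed and dynamic data can be pictured with a small data model. This is only a sketch: the class and field names are hypothetical and do not reflect the actual Cooke/i or LDS record layout, and the lens values are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StaticLensInfo:
    """Fixed information, reported once when the lens powers up."""
    manufacturer: str
    lens_name: str
    serial_number: str
    firmware_version: str
    focal_length_mm: float  # fixed for a prime; a zoom would report it dynamically


@dataclass(frozen=True)
class DynamicLensSample:
    """Values that change while shooting."""
    t_stop: float
    focus_distance_m: float


# Invented example values, not a real lens record.
lens = StaticLensInfo("ExampleCo", "Example Prime 50mm", "SN-0001", "1.0", 50.0)
sample = DynamicLensSample(t_stop=2.0, focus_distance_m=3.0)
print(lens.lens_name, sample.t_stop, sample.focus_distance_m)
```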

With correction for shading and distortion

Cooke lenses with external connections and smart lens mount

Jeff:

A lot of PL Mount cameras don't have any kind of smart connections in the PL Mount. In those cases, how is this information being collected?

Snehal:

The good thing about Cooke/i is that it allows for an external connection point on the lens. You'll notice that any Cooke/i lens from Cooke or from ZEISS, and even now Fuji, has an external connector from which you can get the exact same information as from the pins in the PL Mount, so you can use either one. LDS lenses are limited, because the camera's meant to have a smart mount, it's counting on that.

The ARRI system has been a bit more proprietary until now. With ARRI LDS 2, they want to open it up to more manufacturers, but ARRI LDS was very closed initially, and it was only ARRI cameras, ARRI lenses. The idea was the camera always had a smart mount. We say that, but when the Alexa first came out, there was the Alexa Classic and the Alexa Plus. The Alexa Classic didn't necessarily, when it first came out, come with the pins. This was an issue, because then you couldn't use LDS lenses with it. I think they updated that now.

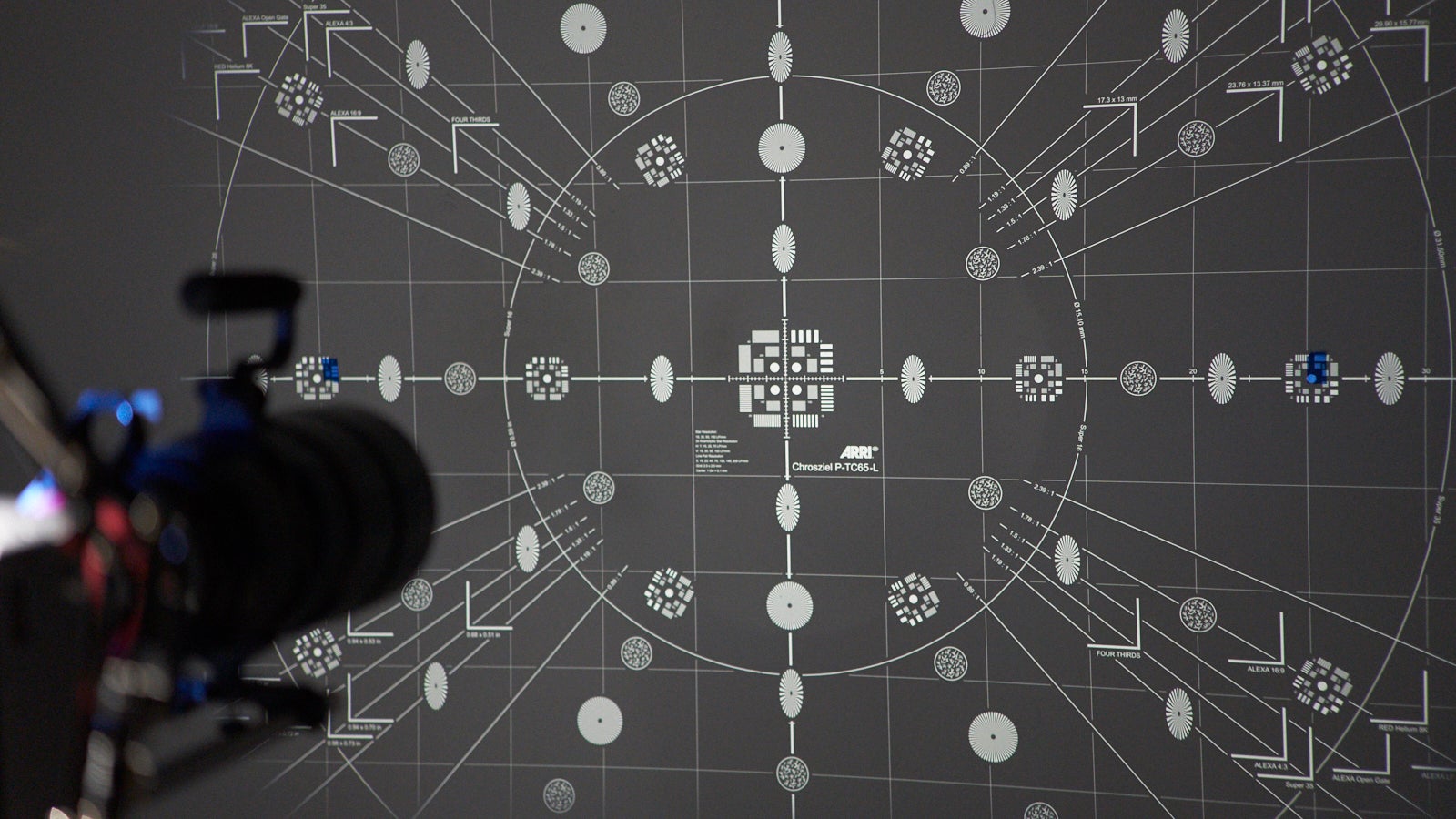

Lens on a projector displaying image circle and shading

Jeff:

On the VENICE, the PL Mount is a smart mount.

Snehal:

The best part about our system right now is that there are two camera systems that record directly into the camera without an external box and one of them is Sony VENICE. It records not just the required data that Cooke/i requires, but also the eXtended Data, the extra data that ZEISS provides for shading and distortion characteristics.

Jeff:

Where is this info stored?

Snehal:

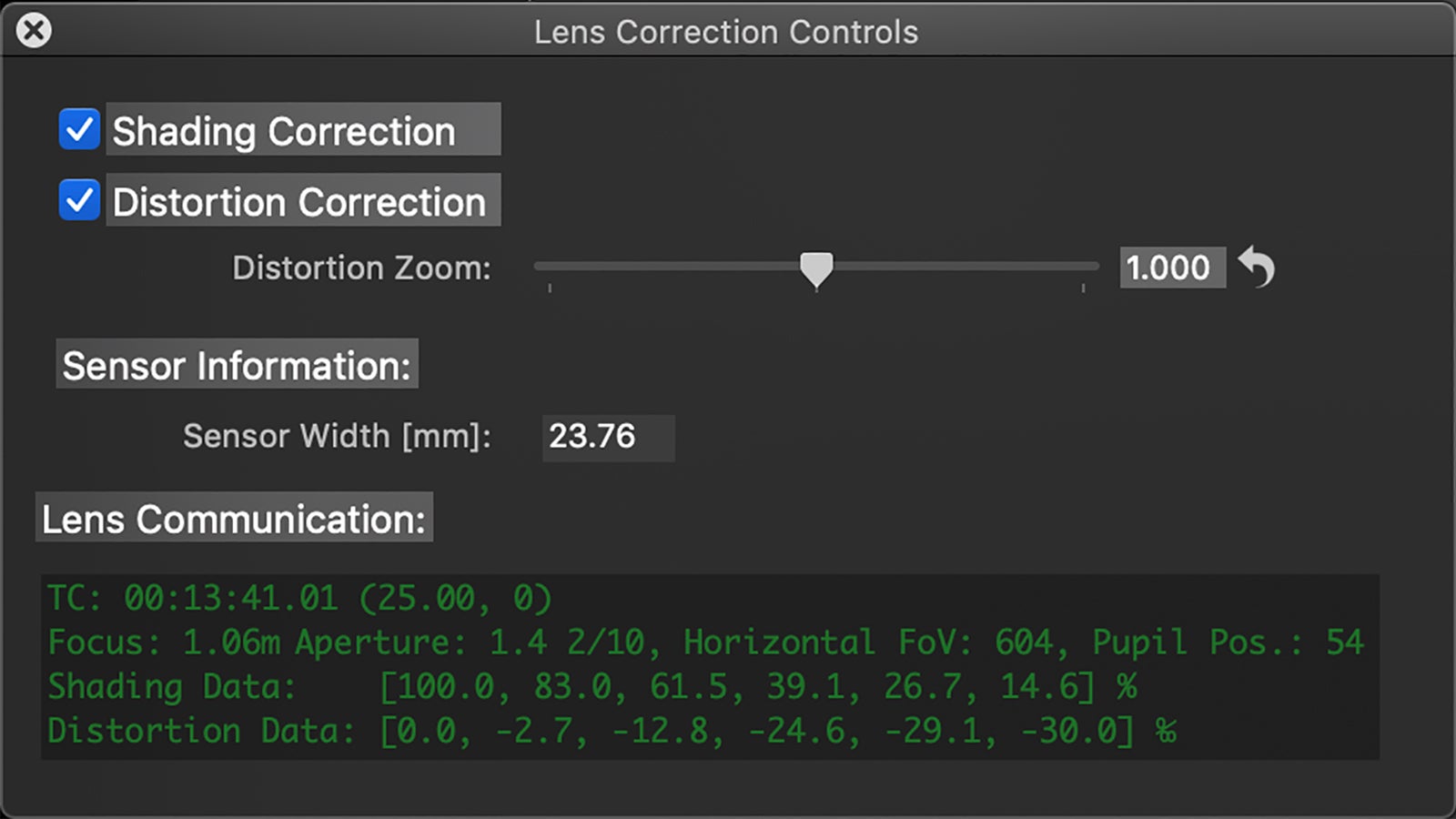

On the camera side, it's just another piece of metadata. You don't need to have it frame by frame. You only need to capture a key frame when something changes. If your focus and iris are not changing, the data's not changing. There's nothing to capture, right? It's only when you move the iris or focus ring that it notes that you may have movement and changed something. That's when there's a transformation in what’s recorded.
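The idea of storing a key frame only when something changes can be sketched as follows. This is a simplified illustration, not the camera's actual recording format. The function names are hypothetical, and samples are compared for exact equality, which assumes the sensor readings are already quantized.

```python
from typing import Iterable, List, Tuple

Sample = Tuple[float, float]  # (t_stop, focus_distance_m)
Keyframe = Tuple[int, Sample]  # (frame_number, sample)


def to_keyframes(samples: Iterable[Sample]) -> List[Keyframe]:
    """Keep a sample only when it differs from the previous one."""
    keyframes: List[Keyframe] = []
    last = None
    for frame, sample in enumerate(samples):
        if sample != last:
            keyframes.append((frame, sample))
            last = sample
    return keyframes


def value_at(keyframes: List[Keyframe], frame: int) -> Sample:
    """Return the most recent keyframe value at or before the given frame."""
    current = keyframes[0][1]
    for kf_frame, sample in keyframes:
        if kf_frame > frame:
            break
        current = sample
    return current


# Seven frames: focus is pulled on frames 3 and 4, iris changes on frame 5.
samples = [(2.0, 3.0)] * 3 + [(2.0, 2.5), (2.0, 2.0)] + [(2.8, 2.0)] * 2
keyframes = to_keyframes(samples)
print(keyframes)
```

Any frame's values can still be recovered by looking up the last key frame before it, which is what `value_at` does.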

What's really interesting about these two extra pieces of information that we're tracking is how dynamic they are. Shading, for example, is quite dynamic. If you open up a lens all the way, a Supreme Prime let's say, and you’re using a camera with a huge sensor, there's easily going to be a stop to a stop and a half difference between the center and the edge of the frame when wide open. As soon as you go down to T4, that goes away almost entirely. At T5.6, it's quite even, and you'll still get 85% of the exposure value at the edge of the frame compared to the center.
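To put the stop and percentage figures on the same scale: each stop halves the light, so a fall-off in stops converts to a fraction of center exposure as 2 to the power of minus the number of stops. The short sketch below does that conversion and also shows the gain a correction would need at the edge. The function names are hypothetical and the numbers are just the ones quoted above.

```python
import math


def stops_to_ratio(stops: float) -> float:
    """Fraction of center exposure left after a fall-off of `stops` stops."""
    return 2.0 ** (-stops)


def ratio_to_stops(ratio: float) -> float:
    """Fall-off in stops for a given fraction of center exposure."""
    return -math.log2(ratio)


def correction_gain(ratio: float) -> float:
    """Multiplier needed at the edge to match the center exposure."""
    return 1.0 / ratio


for stops in (1.0, 1.5):
    print(f"{stops} stop fall-off leaves {stops_to_ratio(stops):.0%} at the edge")
print(f"85% at the edge is a {ratio_to_stops(0.85):.2f} stop fall-off")
print(f"gain to correct 85%: {correction_gain(0.85):.3f}x")
```

In these terms, a stop to a stop and a half wide open means the edge receives roughly a third to a half of the center exposure, while 85% corresponds to under a quarter of a stop.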

This makes a big difference in VFX. If you were to composite an object moving across the frame, there would be a huge difference in the way that object looked if you were at T5.6 versus a T1.4 or T1.5. This is where VFX needs this information. Distortion: the wider a lens is, the more distortion it's going to have. Even if it's rectilinear, even if it's designed to be super straight, the thing is you can't be straight for all purposes for everything. There's always some kind of bend. The wider the lens, the more chance there is for that.

This bend changes as you focus a wide lens, and your distortion actually shifts quite a bit. Perceptibly, you might not see it on the small screen or on a monitor, but as soon as you put it on the big screen, you're going to see it really clearly, that the distortion is changing.

Data with focus, aperture, shading and distortion info

Jeff:

Interesting. So how does this translate to the full frame VENICE sensor?

Snehal:

With the VENICE sensor, the diagonal is not as big as on some other cameras, so you're going to have a little bit less fall-off because you're looking through more of the middle of the glass. Imagine the diameter of the glass: at some theoretical diameter, as big as you can get before it portholes, that's your image circle being projected onto the sensor, regardless of how big the sensor is. The sensor, depending on its size, sees more or less of this image circle.
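The relationship between sensor size and image circle comes down to comparing the sensor diagonal with the circle's diameter. The sketch below uses hypothetical numbers: a generic 36 x 24 mm full-frame sensor, a smaller 24.9 x 18.7 mm sensor, and an assumed 46 mm image circle. None of these are taken from a manufacturer's data sheet.

```python
import math


def sensor_diagonal_mm(width_mm: float, height_mm: float) -> float:
    """Diagonal of a rectangular sensor."""
    return math.hypot(width_mm, height_mm)


def image_circle_usage(width_mm: float, height_mm: float, image_circle_mm: float) -> float:
    """Fraction of the image circle diameter that the sensor diagonal spans."""
    return sensor_diagonal_mm(width_mm, height_mm) / image_circle_mm


# Assumed, illustrative dimensions only.
IMAGE_CIRCLE_MM = 46.0
for name, (w, h) in {"full frame": (36.0, 24.0), "smaller sensor": (24.9, 18.7)}.items():
    usage = image_circle_usage(w, h, IMAGE_CIRCLE_MM)
    print(f"{name}: diagonal {sensor_diagonal_mm(w, h):.1f} mm, uses {usage:.0%} of the circle")
```

A smaller fraction means the corners of the sensor sit closer to the center of the image circle, where fall-off is lower.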

Jeff:

And how do I give all this data to the post house?

Snehal:

That's the best part. When it's embedded into the video files, it's never gone. It's always in your camera originals and you don't even have to extract it when you go to edit. The way we have it right now, there are different methods for what you can do with the data. On the high end, if it's a camera that captures the data... let's look at Lovecraft Country, the high budget HBO show shot on Supreme Primes and VENICE. They captured the data internally. They still did lens grids to make sure that the data worked, but they used the recorded data quite a bit. Now, the best part is, because it was recorded in their video files, it's always going to be in their camera originals.

You can edit, create an EDL, and then follow the normal VFX process, where a VFX editor asks the DI house for pulls of specific shots, providing in and out points in the timeline. The DI house would go back to your camera original and do an export into an OpenEXR image sequence. When they have this image sequence output ready, ZEISS now has command line software to extract the extra data, the shading and distortion characteristics, and the focus and iris position, all of that info from the metadata of the original video files, and inject it into the OpenEXR file headers.

That means that when the VFX person gets it and they use NUKE, which is the industry standard compositing software, we have a free plugin in NUKE that allows you to apply these data characteristics.