01-14-2021 - Gear, Technology

HDR - What Does it Really Mean? - Part 1 - The Tech Behind the Look

By: Alister Chapman

Alister Chapman is a DP, editor, producer, educator, and is very well versed in technology and all things camera and video related.

To learn more about Alister Chapman, visit his website: xdcam-user.com.

We have all heard the term HDR, for High Dynamic Range.

I am sure that many of you are already producing amazing HDR content, but a lot of people are either confused by HDR or have read or watched badly written guides to HDR, many of which contain misleading or misguided information. In this article I hope to correct at least some of the misconceptions that exist about HDR.

SDR blacks, shadows and midrange should be no different from HDR (simulated)

Black is black

One of the most important things to understand is that in both SDR and HDR, black is black. There is no difference between HDR black and SDR black. In a perfect world the black on an SDR TV should be no different from the black on an HDR TV. But you will often hear people say that HDR blacks are blacker than SDR blacks. This simply isn’t correct.

What they are normally referring to is the simple fact that a high quality display with better contrast might do a better job of representing black than a lesser display with poor contrast. If you watch SDR on an HDR TV, the blacks will normally be at the same level as HDR blacks, because the black level is limited by what the display device is capable of, not by any difference between the SDR and HDR standards. The same applies to most of the shadow range.

The HDR version of the same shot will be little different, only the highlights will change (simulated)

If we move up in brightness from black, there should be virtually no difference between SDR and HDR all the way up through the shadows and the mid-range, including skin tones and anything darker than the equivalent of a white piece of paper. Faces should not suddenly be dazzlingly bright just because it’s HDR; they should look little different in HDR or SDR.

Lightning in SDR (simulated), the lightning is barely brighter than the white clouds

Brighter than white

It isn’t until we get brighter than that white piece of paper that things start to become different. An SDR display will only show a fairly limited amount of extra brightness beyond white. If you are viewing in a typical living room, this is just enough to give a reasonable impression of the sky being brighter than that white paper or, in a scene where there is a lamp in a room with white walls, for the lamp to appear a little brighter than the walls. But this is just an impression; bright skies in scenes won’t be realistically bright. Lights and lamps won’t dazzle. Fire, flames and specular reflections never have that true-to-life sparkle. A modern, reasonable quality SDR TV or display will typically have a peak output of around 300 nits (1 nit = one candela per square metre).

Lightning in HDR (simulated), the lightning as well as the brighter parts of the sky are significantly brighter than the white of the clouds

Note in the image above how the increased contrast between the bright lightning and sky helps give the impression that the whole image is more contrasty but the blacks and shadows in both images are the same.

An HDR display can, however, show highlights quite a lot brighter than that white piece of paper. Exactly how bright will depend on the individual display. Some can go a little bit brighter, perhaps to 400 or 500 nits; others can go much brighter, maybe 1000 nits or more. But more isn’t always better, and I will touch on this later. As well as brightness, contrast is also important. If you want blacks to appear dark you want a TV with good contrast. The greater the separation between light and dark, the darker the dark bits will appear.

New Sony Bravia XR HDR TV

Power and heat

Then there is the issue of power consumption and heat. There are laws and regulations that set out the maximum power a TV or monitor can consume based on the size of the screen. In addition, most consumers would not accept noisy fan-cooled screens in their living rooms. Because of this there are limits to the maximum average brightness an HDR display can show. Depending on the TV and the technology it uses, something that’s 1000 nits may only be possible across a small part of the screen, with perhaps 500 nits over a much larger part of the screen and 300 nits over the whole screen. These differences between TVs and monitors in peak brightness levels and maximum average brightness levels create all sorts of potential issues for content creators, as the highlights of the same material may look quite different on different HDR TVs.

Metadata

To help minimize these differences, and in an attempt to keep productions looking the way they’re intended to look, metadata is added to HDR files that describes the target average brightness, MaxFALL (Maximum Frame Average Light Level), and the peak brightness level, MaxCLL (Maximum Content Light Level). The viewer’s TV is then supposed to use this metadata to adapt its output to deliver an image as close as possible to the way it looked in the grading suite. However, different manufacturers implement these adaptations in different ways, and a better HDR TV will still tend to produce an image closer to that seen in the grading suite than a lesser HDR TV.
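As a rough illustration of what these two values describe, here is a minimal Python sketch. It assumes each frame is already available as a flat list of per-pixel light levels in nits, which is a simplification: real tools normally work from the brightest of each pixel's red, green and blue components in linear light. The function name `static_light_levels` and the sample frames are hypothetical, made up for this example.

```python
from typing import Iterable, Sequence, Tuple

# A frame is a flat list of per-pixel light levels in nits
# (hypothetical layout, chosen only to keep the example small).
Frame = Sequence[float]


def static_light_levels(frames: Iterable[Frame]) -> Tuple[float, float]:
    """Return (MaxCLL, MaxFALL) in nits for a sequence of frames."""
    max_cll = 0.0   # brightest single pixel found in any frame
    max_fall = 0.0  # highest frame-average light level of any frame
    for frame in frames:
        if not frame:
            continue  # skip empty frames rather than divide by zero
        max_cll = max(max_cll, max(frame))
        max_fall = max(max_fall, sum(frame) / len(frame))
    return max_cll, max_fall


# Two tiny four-pixel "frames": a dim scene with one specular highlight,
# and a uniformly bright sky.
frames = [
    [5.0, 20.0, 100.0, 1000.0],
    [300.0, 300.0, 300.0, 300.0],
]
max_cll, max_fall = static_light_levels(frames)
print(f"MaxCLL = {max_cll:.0f} nits, MaxFALL = {max_fall:.0f} nits")
```

Note that the two numbers can come from different frames: here the peak comes from the small highlight in the first frame, while the highest average comes from the evenly bright second frame.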

Standards

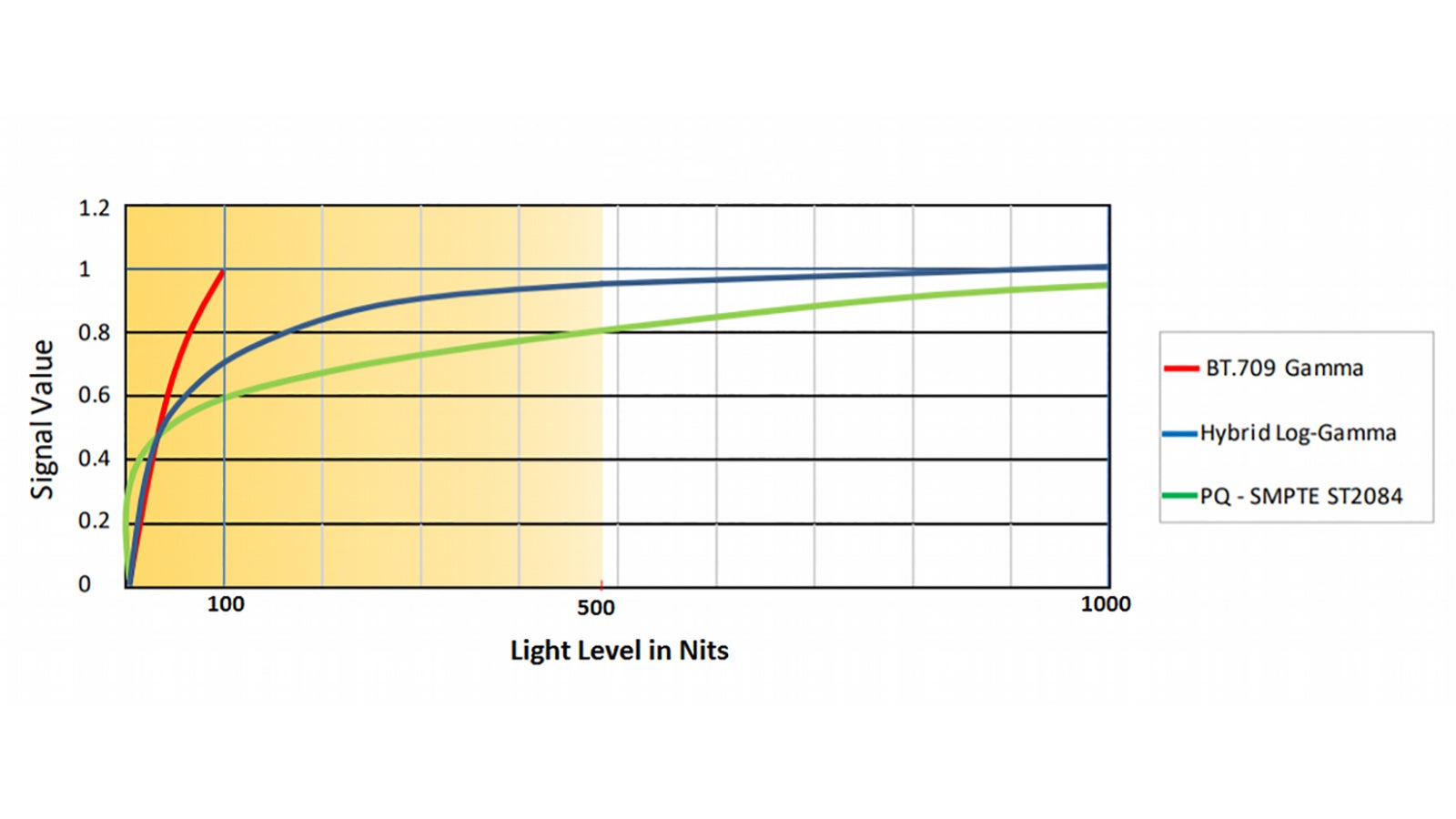

There are many HDR delivery standards, including HDR10, HDR10+, HLG and Dolby Vision. All of these, with the exception of HLG, use the same gamma curve or, more accurately, the same EOTF (Electro-Optical Transfer Function). This is called ST2084 or PQ. In theory, all the PQ-based standards can reach the same peak brightness of 10,000 nits. But the reality is that current consumer displays don’t get anywhere near that level.

ST2084

ST2084 is an absolute transfer function. This means that each input or stored value in the video file should represent a very specific on-screen brightness level. There is no allowance for differing viewing environments. For this reason, if you are setting up a grading suite for HDR it’s particularly important that you have the correct ambient light level around the grading suite monitor. Normally this will be around 5 nits, which is pretty dark. An unfortunate consequence of this is that shadows in HDR content that look great in the grading suite will often become invisible in a brighter home viewing environment where higher ambient light levels will wash them out. The TV manufacturers are aware of this and often, in an attempt to reduce this, they will “break” the ST2084 standard and adjust the darker parts of the image depending on ambient light levels as measured by light sensors in the TV.
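To make the idea of an absolute transfer function concrete, here is a small Python sketch of the PQ curve using the constants published in SMPTE ST 2084. The function names are my own, and the sketch works on normalized signal values from 0 to 1 rather than on 10-bit or 12-bit code values, so treat it as an illustration, not a production implementation.

```python
# Constants as published in SMPTE ST 2084.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10000.0  # luminance represented by a signal value of 1.0


def pq_eotf(signal: float) -> float:
    """Map a normalized PQ signal (0..1) to display luminance in nits."""
    e = max(0.0, min(1.0, signal)) ** (1 / M2)
    return PEAK_NITS * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1 / M1)


def pq_inverse_eotf(nits: float) -> float:
    """Map display luminance in nits (0..10000) to a normalized PQ signal."""
    y = (max(0.0, min(PEAK_NITS, nits)) / PEAK_NITS) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2


# Each luminance level always maps to the same signal value,
# whatever display or viewing environment is used.
for nits in (0.005, 100, 1000, 10000):
    print(f"{nits:>8} nits -> signal {pq_inverse_eotf(nits):.3f}")
```

Because the mapping is fixed, 100 nits lands at roughly half of the signal range and 1000 nits at roughly three quarters, leaving the top quarter for levels that current consumer displays cannot reach.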

More Metadata

The difference between each of the ST2084/PQ-based standards is in the way they use metadata. For HDR10 there is just one set of static metadata about the peak and average brightness levels for the entire file. HDR10+ adds dynamic metadata that can change for different shots or scenes. Dolby Vision also uses dynamic metadata. The Dolby Vision metadata also describes how to convert the HDR image to an SDR image for SDR viewing or distribution. By interpolating between the SDR levels and the full range HDR levels, Dolby Vision aims to produce an HDR image that should look as good as possible within the limitations of widely varying TV display panels. Again, this is all very good in theory, but in reality it adds a further way in which the colorist’s or director’s artistic intentions may be unintentionally distorted between the grading suite and what the end viewer sees.

An optimized Rec-709 SDR image

HLG - Hybrid Log Gamma

The one standard I haven’t touched on yet is HLG or Hybrid Log Gamma. As its name suggests HLG is a hybrid gamma curve. The lower part of the HLG gamma curve is not hugely dissimilar to normal SDR Rec-709 gamma. The upper part of the curve is a logarithmic gamma curve designed to represent a large highlight range. The peak brightness for the most commonly used BBC version of HLG is 5000 nits, somewhat less than HDR10 but still brighter than current TVs can deliver. In all likelihood this is about as bright as you will ever want for domestic viewing anyway.
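The two-part shape of the curve can be seen in a short Python sketch of the HLG OETF, using the constants given in ITU-R BT.2100. The function name is my own, the input is assumed to be normalized scene light from 0 to 1, and the display-side processing (the system gamma that a real HLG display applies) is deliberately left out.

```python
import math

# Constants as given for the HLG OETF in ITU-R BT.2100.
A = 0.17883277
B = 1 - 4 * A
C = 0.5 - A * math.log(4 * A)


def hlg_oetf(scene_light: float) -> float:
    """Map normalized scene light (0..1) to a normalized HLG signal (0..1)."""
    e = max(0.0, min(1.0, scene_light))
    if e <= 1 / 12:
        # Lower part: a square-root (gamma-like) curve, close to SDR behaviour.
        return math.sqrt(3 * e)
    # Upper part: a logarithmic curve that compresses the highlight range.
    return A * math.log(12 * e - B) + C


for e in (0.01, 1 / 12, 0.25, 0.5, 1.0):
    print(f"scene light {e:.4f} -> signal {hlg_oetf(e):.3f}")
```

The lower half of the signal range is a simple square-root curve covering the first twelfth of the scene light range, while the upper half of the signal range is spent logarithmically on everything brighter than that.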

HLG is a relative display standard and it includes modifiers that can be used to adjust the shape of the curve to allow for viewing in different ambient light levels. Because of this the viewer’s perception of what is seen on the screen should actually be more consistent than ST2084/PQ across a wider range of home viewing environments. Despite some similarities to SDR Rec-709, one drawback of HLG is that the chosen brightness level for white is lower than normally used for SDR and as a result HLG content will often appear less bright than similar SDR content.

But HLG’s big selling point is that, because the lower part of its curve is similar to Rec-709 gamma, if you view HLG on a normal SDR TV it doesn’t look “wrong.” This means it is possible to use HLG for distribution to end viewers where only a single system can be used: those with SDR TVs will have an acceptable (but not optimum) SDR image, while those with HDR TVs will see an HDR image. It is a bit more complex than this, as there are also choices that need to be made over the color space used, but HLG does offer some degree of backwards compatibility with SDR.

The same image as above but using HLG

As seen in the image above, when viewed in SDR it looks a bit darker than the optimized Rec-709 image, but is not unacceptable. On an HDR HLG screen this image would have similar mid-range brightness to the Rec-709 version but with much brighter highlights.

Viewer Fatigue

One thing rarely discussed with HDR is eye fatigue or viewer fatigue. An SDR image fits very comfortably within the eyes’ static dynamic range (estimated to be around 12 stops), so watching a full-length feature film in SDR is rarely visually tiring. But a high-end HDR display can go all the way from extremely low brightness levels, perhaps as low as 0.005 nits, up to 1000 nits. Viewed in a dark environment, this starts to approach the boundary of what is comfortable to watch, and if a very large dynamic range is maintained over long periods it can become tiring. For this reason, it is often desirable to use a smaller range for much of a longer production and only use a very large dynamic range for short periods, perhaps for specific scenes or shots that will benefit from HDR for a more dramatic effect. Many colorists who work on HDR productions will tell you that an HDR grade can be much more fatiguing to work on than an SDR grade.
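To put the display figures on the same scale as the eye’s roughly 12 stops, the range between black and peak can be converted to stops, where each stop is a doubling of light. The helper below is a hypothetical sketch that simply takes the base-2 logarithm of the contrast ratio, using the 0.005 nit and 1000 nit figures quoted above.

```python
import math


def dynamic_range_stops(peak_nits: float, black_nits: float) -> float:
    """Return the range between black and peak in stops (doublings of light)."""
    return math.log2(peak_nits / black_nits)


# The high-end HDR display described above: 0.005 nits up to 1000 nits.
hdr_stops = dynamic_range_stops(1000, 0.005)
print(f"High-end HDR display: {hdr_stops:.1f} stops")
```

That works out at about 17.6 stops, well beyond the estimated 12 stops the eye can take in at once, which is why holding the full range on screen for long periods becomes tiring.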

Part 2 coming very soon.