11-04-2020 - Gear, Technology

Cine Lens Metadata - An Overview - With Snehal Patel of ZEISS Lenses - Part 2 - Real World Applications

By: Jeff Berlin

Snehal Patel is a film and television professional with over two decades of experience creating content and adapting new technology. He started the first Canon Bootcamp in Los Angeles during the Canon 5D DSLR craze.

Snehal has lived and worked in Chicago, Mumbai and Los Angeles as a freelance Producer & Director. He was a camera technical salesperson at ARRI, and currently works as the Sales Director for Cinema at ZEISS. He represents the Americas for ZEISS and is proud to call Hollywood his home.

This is Part 2, detailing some real-world and practical applications of this technology.

For more information about ZEISS cinema lenses, click HERE.

Read Part 1 of this article HERE.

Sigma Cine Primes in PL mount also include Cooke /i protocols

Jeff Berlin:

Let’s discuss how this all works in real-world applications.

Snehal Patel:

Okay. Let's say you're doing a wide shot and you have to composite it with multiple plates. What you would do first is un-shade and un-distort everything and flatten everything out. Then, you would add your composite elements because you're going to drop things into the computer more squared off, not with the bend of a lens.

Once you load those flatter-looking elements and layer everything together, you then re-apply your shading and distortion characteristics over the whole final composite image. That's how you get a better composite and how everything looks more natural, like it was actually shot in camera, so that you don't have, like, an A cam shot of your closeup looking vastly different from the VFX shot that follows right afterwards. The two should be seamless and look like they came from the same camera and lens combination.

That's how VFX works in this regard, so the workflow does not change. And ZEISS provides free plugins for NUKE and for After Effects for this purpose.
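The order of operations described above (flatten the plate, composite in flat space, then re-apply the lens characteristics) can be sketched in a few lines of Python. This is only an illustration: it assumes a simple two-coefficient radial distortion model, and the coefficients `K1` and `K2` are made-up values, not data from any real lens or from the ZEISS plugins.

```python
# Hypothetical radial distortion coefficients, for illustration only.
# In practice these characteristics would come from the lens metadata.
K1, K2 = -0.08, 0.01

def distort(x, y, k1=K1, k2=K2):
    """Map an ideal (flat) normalized image point to where the lens renders it."""
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale

def undistort(xd, yd, k1=K1, k2=K2, iterations=20):
    """Invert distort() numerically with a fixed-point iteration."""
    x, y = xd, yd
    for _ in range(iterations):
        r2 = x * x + y * y
        scale = 1.0 + k1 * r2 + k2 * r2 * r2
        x, y = xd / scale, yd / scale
    return x, y

# 1. Flatten a point from the photographed plate.
plate_point = (0.744, 0.558)
flat_plate_point = undistort(*plate_point)

# 2. Place a CG element in the same flat space (hypothetical position).
flat_cg_point = (0.5, -0.6)

# 3. Re-apply the lens distortion to everything in the final composite.
final_plate_point = distort(*flat_plate_point)
final_cg_point = distort(*flat_cg_point)

print("plate point after round trip:", final_plate_point)
print("CG point with lens bend applied:", final_cg_point)
```

The plate point returns to its original position after the round trip, while the CG element picks up the same bend as the photographed material, which is why the two then appear to come from the same lens.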

Jeff:

Is ZEISS extended data the same language as Cooke/i?

Snehal:

Yes, we're a Cooke/i technology partner. We are using their protocol and adding some optional information, manufacturer-specific information for example.

Jeff:

So we will find this functionality in the ZEISS Supreme Primes and the Radiance, obviously, but what others?

Snehal:

It’s in the ZEISS CP.3 XD as well. And the Premista Zooms from Fujinon also support the ZEISS XD technology.

Jeff:

Ah, nice. I’m hearing a lot of good things about those lenses.

Snehal:

This way, they, Fujinon, don't have to have a full infrastructure. We have the free plugins and post tools. There is on-set software that lets you see the shading and distortion characteristics. We have the Live Grade and Silverstack applications, which are Pomfort software for DITs that let you see the data, test it, make sure it's working correctly, and extract it to use for other purposes.

Jeff:

So how do we benefit from this on set?

Snehal:

On set, the main thing is to make sure you're capturing the data. Other than that, it’s not really an on-set thing unless you're doing virtual production. For virtual production, you need this data in real time. Say you have a wide-angle lens and you're doing the new Bugs Bunny basketball movie, Space Jam: you have animated characters standing next to live actors or basketball stars. This is a very common thing with virtual production. You have AR [augmented reality] objects or background objects, or you're standing on a floor. Now, if you're standing on a real floor in real life and I put a wide-angle 18mm lens on the camera, that floor bends. Actually, that floor appears to be lower at the apex, at the bottom, than it is in real life, because of the curvature of the lens. The farther you get from the center of the lens, the more your corners bend, as do your walls.

Now, if I add a background object and my background is flat but my foreground is bent, it's going to look odd, right? Because any poles or anything I have in the foreground is going to look like it's bending, because they are real objects, but everything else in the background is not bending, not real, so it doesn't look like it matches the lens.

Also, let's say you created a cartoon character standing next to you, or jumping around you. It won't be standing on the ground; it'll be floating maybe an inch off the ground. Its feet won’t appear to be touching the ground because we haven't accounted for the distortion. Not only do you want to track the shading and distortion characteristics, but you need to apply them in real time, through the Unreal Engine, for example, in virtual production, otherwise you can't line up your objects.

Normally, what they do is take half an hour to forty-five minutes to calibrate a lens. It's a long process. They put each lens through the process, and note the serial number, to track exactly what characteristics it's giving the camera, so that it works correctly. We're giving you those characteristics agnostic of the camera.

And that's the best part. In our system, we give you the data based on what the lens is doing for the whole image circle. Then, based on your sensor size, you can calculate what it’s doing based on your camera setting. That's what the plugins and software do. Right now, we've already started working with a couple of virtual production companies, like Mo-Sys and NCam, to integrate this direct translation into their hardware so you no longer have to calibrate lenses. But we're now also working with Epic and Unreal Engine so that we can just feed the data directly to Unreal Engine and it could spit out the changes for you.
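As a rough illustration of the idea that the lens describes its behavior over the whole image circle and the software then works out what that means for a given sensor, here is a minimal Python sketch. It is not the ZEISS plugin logic: the shading table, its values, and the sensor dimensions are all hypothetical and chosen only to show the calculation.

```python
import math

# Hypothetical shading samples across the image circle:
# (distance from the optical axis in mm, brightness relative to the center).
SHADING_TABLE = [
    (0.0, 1.00), (5.0, 0.97), (10.0, 0.90),
    (15.0, 0.78), (20.0, 0.62), (23.0, 0.50),
]

def relative_illumination(height_mm, table=SHADING_TABLE):
    """Linearly interpolate the shading table at a given image height."""
    if height_mm < 0 or height_mm > table[-1][0]:
        raise ValueError("image height lies outside the described image circle")
    for (h0, v0), (h1, v1) in zip(table, table[1:]):
        if h0 <= height_mm <= h1:
            t = (height_mm - h0) / (h1 - h0)
            return v0 + t * (v1 - v0)
    return table[-1][1]

def corner_illumination(sensor_width_mm, sensor_height_mm):
    """Shading at the sensor corner, which sits at half the sensor diagonal."""
    corner_height = math.hypot(sensor_width_mm, sensor_height_mm) / 2.0
    return relative_illumination(corner_height)

# Approximate, illustrative sensor sizes in mm (assumptions, not camera specs).
for name, (w, h) in {"Super 35-like": (24.9, 18.7), "full frame": (36.0, 24.0)}.items():
    print(name, "corner brightness:", round(corner_illumination(w, h), 3))
```

The same lens data serves both sensors. Only the portion of the image circle that the sensor actually covers changes, so the larger sensor reaches farther out and sees darker corners.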

Let’s forget about shading and distortion data for a minute and instead look at some simpler things, like how this data is used for the TV show The Mandalorian. You need the exact focus distance and iris setting from the lens going into your system in the Unreal Engine in real time, where you're creating backgrounds, so you automatically know exactly what the depth of field is, instead of best-guessing and then letting the DP decide what it should be.
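To show why focus distance and iris are enough to derive depth of field, here is a small Python sketch using the standard thin-lens depth-of-field formulas. It is an approximation under stated assumptions: the circle of confusion value is a hypothetical default, the iris is treated as an f-number (cine lenses are usually marked in T-stops, which differ slightly), and this is not how any particular virtual production system computes it.

```python
import math

def depth_of_field(focal_mm, f_number, focus_mm, coc_mm=0.025):
    """Return (near, far) limits of acceptable sharpness in mm.

    coc_mm is the circle of confusion; 0.025 mm is an assumed value.
    """
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = focus_mm * (hyperfocal - focal_mm) / (hyperfocal + focus_mm - 2 * focal_mm)
    if focus_mm >= hyperfocal:
        far = math.inf  # everything beyond the near limit is acceptably sharp
    else:
        far = focus_mm * (hyperfocal - focal_mm) / (hyperfocal - focus_mm)
    return near, far

# Hypothetical live values as they might arrive from the lens each frame.
near, far = depth_of_field(focal_mm=50.0, f_number=2.8, focus_mm=3000.0)
print(f"sharp from {near / 1000:.2f} m to {far / 1000:.2f} m")
```

With focal length, iris, and focus distance arriving from the lens, the near and far limits can be recomputed every frame rather than estimated by eye.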

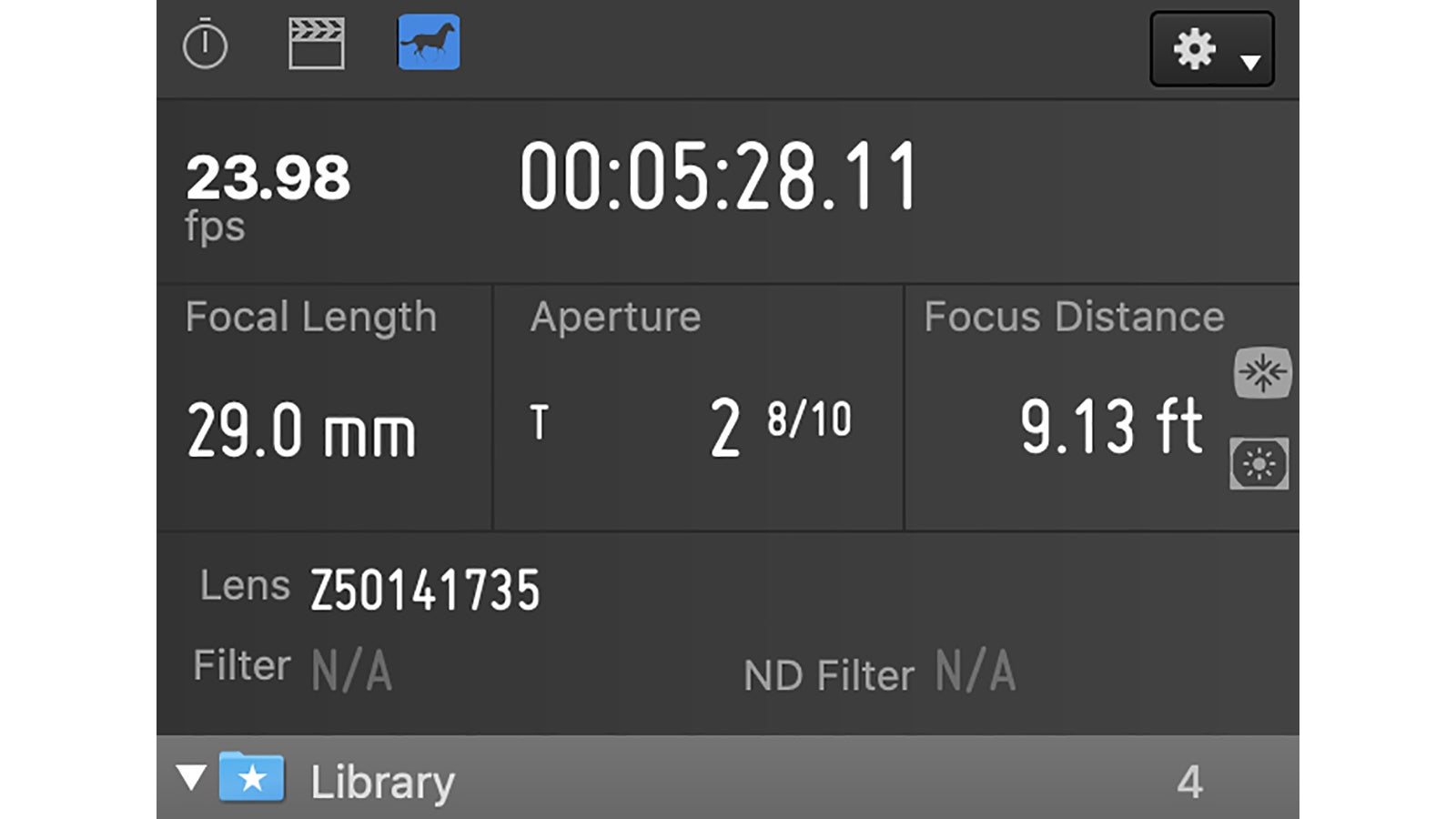

Some of the data sent by the lens

Snehal cont'd:

This is why lens metadata is important, because it's not just for the backend. Believe me when I say that, for $300 million a picture, you could save millions of dollars if you have more data in visual effects. It's just how it works. But even in virtual production, it's necessary now to have even better data, better communication between camera lenses and the backend, the computer systems, to get a more accurate image upfront because that's what it's all about. In virtual production, it's about skipping the backend.

It’s also important for volumetric shooting. Volumetric shooting is when you have objects or people in the middle of a space with cameras surrounding that object, capturing an image from all angles and recreating it in 3D. By having your shading and distortion characteristics, you can stitch together this 3D object much more accurately with AI software. There are so many applications for this.

What we've done at ZEISS is made smart lenses really simple, making them useful for all kinds of different camera sensors. Based on the focus and iris position, the lens will tell you what the lens characteristics are in real time over a large image circle that is bigger than the camera sensor. Then, the backend, the plugins and the software will calculate, based on the exact sensor size you used for that project, what your output is going to be. If your camera doesn't record all this data directly, because that's also the way it tracks what sensor size it's using, you could specify the sensor size on the back end, if need be.

Ambient MasterLockitPlus

Jeff:

Do ACs use any of this data when they're pulling focus?

Snehal:

DPs and ACs have been using smart lenses like crazy because on your wireless systems, if it's a smart lens, you don't have to program it. You can either plug a cable into it, or your camera tells you focus distance and iris automatically.

Then there are some DPs who are fanatical about keeping track of their looks, who do screen grabs of their setups, of their monitor output from the cameras that tells them on screen the color temperature, ISO, and focus and iris position, because all the modern cameras have monitor outputs that give you the smart lens info too.

This is good because if you have to do setups over multiple days, you know exactly what you did earlier and you can match it much more easily. You don't have to guess on Thursday what lighting setup you did that previous Monday, you can just go back to it in the data and see it. So having that smart data helps you as a DP as well, because even six months later you can go back and match a shot exactly. Say you’ve got 40 minutes with Charlize Theron for a feature that is supposed to release next week and you have to shoot a pickup for a scene: you need that one shot and you need it to match what you did previously.

So how do you match that one shot with everything else you did? If you knew what your focus distance was, if you knew what your iris was, you're halfway there because then you can look at a shot, replicate your lighting and you're done, you can match the shot. Having just this info, focus, iris, zoom, is valuable, but on top of that, having shading and distortion characteristics takes it to a whole new level for visual effects, for virtual production, 3D capture, everything else.

Jeff:

What about recording externally?

Snehal:

If you need to record externally, you can use a MasterLockitPlus timecode and metadata box from Ambient, or you can use a five-inch Transvideo StarliteHD-m monitor that captures data as well.

Transvideo StarliteHD-m monitor